Hi all,

From the end user's point of view, the offload database is being read while Dataminer continues to offload more data into it, which results in locking issues on the remote tables.

The post-processing of information starts at 1 am daily.

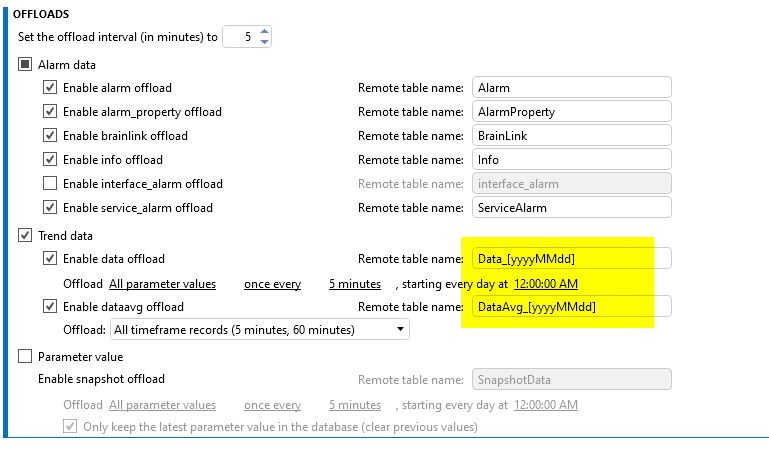

Is it feasible for Dataminer to offload data into a new table each day?

For instance, could we configure a date-time placeholder within the offload settings to enable Dataminer to create a new table for each day?

If Dataminer could do this, the post-processing would read from a table that is no longer being written to, while Dataminer keeps offloading into the new table without any issues.

Thanks.

We currently don’t support that kind of feature. However, once the offload has finished pushing its data into the database and the lock is released, the read can be done again. Adding retry logic to the queries in your application could be an option. If you see that too many locks are being hit, I would suggest increasing the offload interval of your ‘All parameter values’ to, e.g., once every 10 minutes. This will reduce the number of points offloaded and how often they are offloaded; of course, you also lose some resolution.
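As a rough illustration of the retry idea, here is a minimal Python sketch. Everything in it is an assumption for illustration: `run_with_retry` and `TransientLockError` are hypothetical names, and you would replace `TransientLockError` with whatever lock or timeout exception your database driver actually raises.

```python
import time


class TransientLockError(Exception):
    """Hypothetical stand-in for the lock/timeout error your DB driver raises."""


def run_with_retry(query_fn, attempts=5, base_delay=0.5,
                   retry_on=(TransientLockError,), sleep=time.sleep):
    """Call query_fn, retrying with exponential backoff when a lock is hit."""
    for attempt in range(1, attempts + 1):
        try:
            return query_fn()
        except retry_on:
            if attempt == attempts:
                # Out of attempts: let the caller see the last error.
                raise
            # Wait 0.5 s, 1 s, 2 s, ... before trying again.
            sleep(base_delay * 2 ** (attempt - 1))
```

You would wrap each read in a function and pass it in, e.g. `rows = run_with_retry(lambda: fetch_rows(conn))`, where `fetch_rows` is your own query code.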

Tuning in to see what the community will suggest: could there be a performance issue in the offload DB instead? Or could the logic change so that the information is processed continuously as it comes in, rather than waiting until 1 am? From observing the system, I’ve always thought of the offload as a continuous process (I hope I’m not wrong on this). Within each entry, the time reference will be ‘toa’. Would it be viable to tune the reading app to read only the entries whose toa falls within the last 24h?
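To make the toa idea concrete, here is a small Python sketch of a time-windowed read. It is only an assumption of how this could look: the table name `offload_data` and the columns `parameter_id` and `value` are hypothetical, only `toa` comes from the discussion above, and it assumes `toa` is stored as a `YYYY-MM-DD HH:MM:SS` string on a DB-API connection that supports `?` placeholders (as `sqlite3` does).

```python
import sqlite3
from datetime import datetime, timedelta


def read_last_24h(conn, now):
    """Return rows whose toa lies in the 24 hours before `now` (exclusive)."""
    since = now - timedelta(hours=24)
    cur = conn.execute(
        # offload_data, parameter_id and value are hypothetical names.
        "SELECT parameter_id, value, toa FROM offload_data "
        "WHERE toa >= ? AND toa < ? ORDER BY toa",
        (since.isoformat(sep=" "), now.isoformat(sep=" ")),
    )
    return cur.fetchall()
```

Bounding the query on both sides keeps each read short and means rows offloaded while the job is running are simply picked up by the next run.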