Hi community,

I tried to monitor a 3 nodes Cassandra cluster with the “Apache Cassandra Cluster Monitor” driver version 1.0.2.2

The System Checks table shows two warnings for all three nodes:

1. Repair Needed, Warning, “The tables ‘partition_denylist’, ‘view_build_status’ were not repaired within the tombstone removal period. Please increase the gc_grace_seconds or the frequency of the repairs. Repair checks for specific tables can be disabled in the Tables table.”

Cassandra-Reaper is running and blacklistTwcsTables is set to true. So everythings is running in auto mode. What should I do, simply disable the check for this 2 tables?

2. Server Encryption, Warning, When your nodes communicate over a zero trust network, it is best to enable inter-node encryption (server_encryption_options_enabled, server_encryption_options_internode_encryption, server_encryption_options_endpoint_verification). For more information on how to enable it go to below link.

I think inter-node encryption is running fine. What is expected in the config file?

My settings:

server_encryption_options:

internode_encryption: all

optional: false

legacy_ssl_storage_port_enabled: false

keystore: …

keystore_password: …

require_client_auth: false

truststore: …

truststore_password: …

require_endpoint_verification: false

Regarding the 2nd question:

It looks like Dataminer expects require_endpoint_verification = true.

If I set it to true I can only reach each cassandra node locally and got cassandra errors like ” No subject alternative names matching IP address 192.168.2.162 found”. But they are specified in the cert (each keystore contains all 3 nodes with SAN and the rootCA).

How to debug?

I see that this question has been inactive for some time. Do you still need help with this? If not, could you select the answer (using the ✓ icon) to indicate that the question is resolved?

Sorry for the late response.

1. TWCS tables should not be checked against repairs as they should not be repaired as you already mentioned. This is a fix that was done from version 1.0.2.2. The tables that you mentioned are not using TWCS. Those should indeed be repaired. From Reaper system keyspaces are not automatically being added to the schedule, there is an open issue for the system_auth keyspace. So ideally you add those keyspaces manually.

Note: In the meantime we already have a 1.0.2.6 version which also includes important fixes.

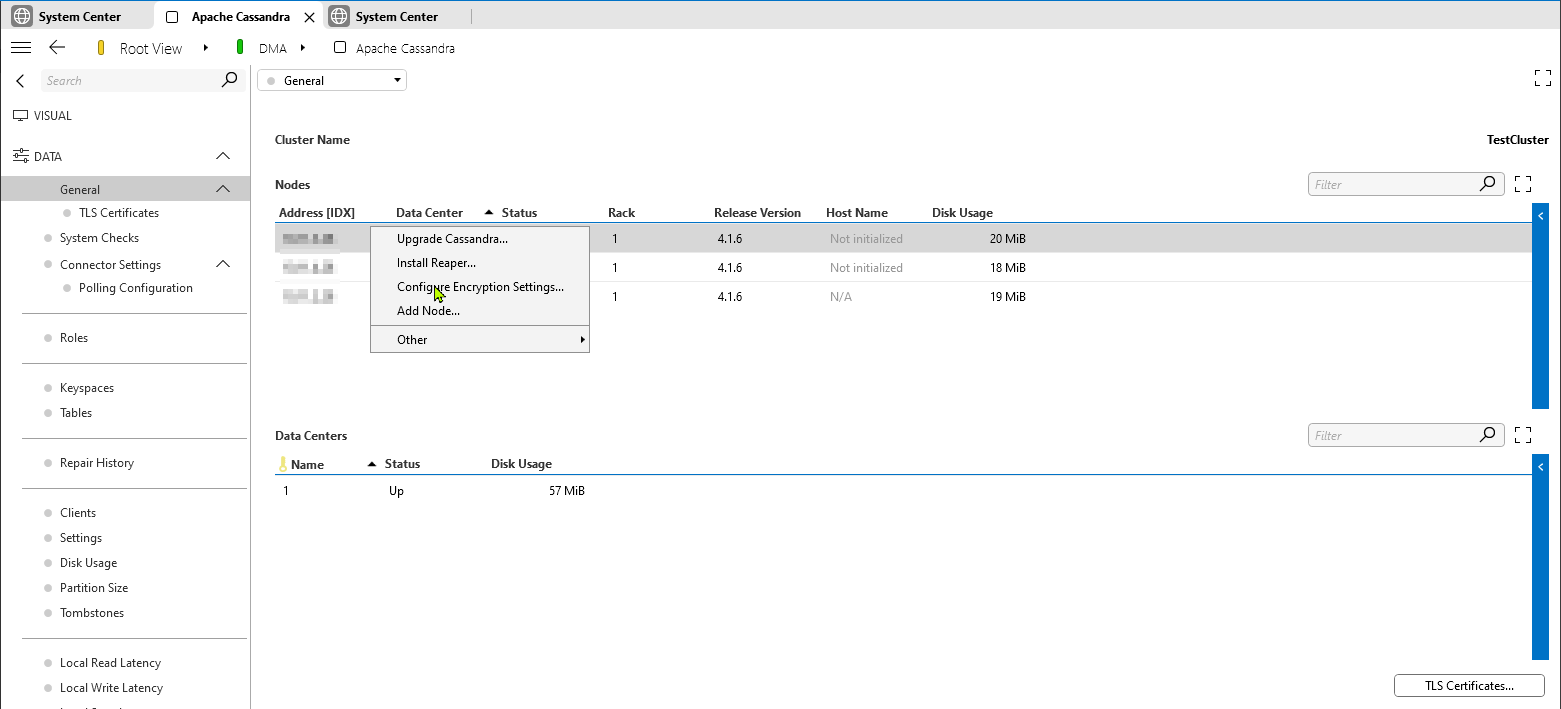

2. In order to enable encryption between nodes and/or between clients of Cassandra, you need certificates. It can be quite challenging to get this right if you have no experience on that. With the Apache Cassandra Installer there is a wizard to guide you through it. Unfortunately there is no documentation yet, but it is in the making. In a nutshell, once the package is deployed you can use the context menu (see below screenshot) in the nodes table to connect with SSH and to do changes on the system. Development of this is still ongoing, so feel free to let me know if you are encounter issues with it.

Hi Michiel, all,

Following on this topic with Reaper TWCS repairs.

My experience is that the Reaper (3.6.1) actually still does repair TWCS tables in schedules even with the blacklistTwcsTables flag set to true.

Reading the documenation

https://cassandra-reaper.io/docs/configuration/reaper_specific/

it's actually designed that way: "This automatic blacklisting is not stored in schedules or repairs. " I believe, this is not desired.

Can you check/confirm same and/or advise how to exclude specific tables from being repaired in Reaper schedule?

Thank you.

EDIT: Ok, I stopped and deleted the schedules manually from Reaper, maybe they were some leftovers. I will monitor in case they re-appear in the schedules. Thanks.

Hi Martin,

Do you know if the blacklistTwcsTables was enabled on all reaper instances (normally reaper is installed as side-car on every Cassandra node)?

Yeah, this I don't know. As far I as remember I set it to true on all nodes. So, it is possible that on one of reaper instances (we have 4 nodes), the blacklistTwcsTables was set to false by default which caused Reaper to add even the TWCS tables to the schedule?

Regardless of the above, it seems Reaper has added the tables to the schedule again, even after my manual deletion, example below.

How does this work for you? Are these tables not auto-added in Reaper schedule?

staging_trend_data_long

Next run November 15, 2024 6:09 PM

Owner auto-scheduling

Nodes

Datacenters

Incremental false

Segment count per node 64

Intensity 0.8999999761581421

Repair threads 1

Segment timeout (mins) 30

Repair parallelism DATACENTER_AWARE

Pause time 2024-11-14T12:13:47Z

Creation time November 14, 2024 12:04 PM

Adaptive false

I would advise to double check 'blacklistTwcsTables' is set enabled on all reaper instances and restart the Reaper service on all nodes to ensure the settings are read from the config file (only at startup done). Then the repair should not be triggered. Maybe it is still in the schedule, but you should no longer see them in the repair history at least.

Thanks. I have excluded the keyspaces manually as hinted here

https://community.dataminer.services/question/repair-settings-for-cassandra-reaper/

We have the exact same issue with Cassandra cluster monitoring showing those two tables as “repair needed”. We have run a nodetool repair -full. Wouldn’t that repair those tables as well?

It will depend on how your cluster looks like, the RF etc. nodetool repair -full will initiate a repair from the node on which you run the command for all token ranges that should be available on that node. So if you have more nodes then you RF, not all data will be repaired running it from one node. Important is that you don’t trigger a repair for the same token range on more than one node at the same time as Cassandra does not handle this well. That is why Cassandra Reaper was created to handle that for you.

Additional question:

Dataminer shows me that one not is not available “Cassandra cluster health is yellow. DataMiner is still fully functional. 1 out of 3 Cassandra nodes are unavailable: ………..:9042.”

But the Cluster monitor driver works fine with this node and I can connect with DevCenter too. What could be wrong?