Hello,

We have many replicated elements in our system and we make use of many DMPs.

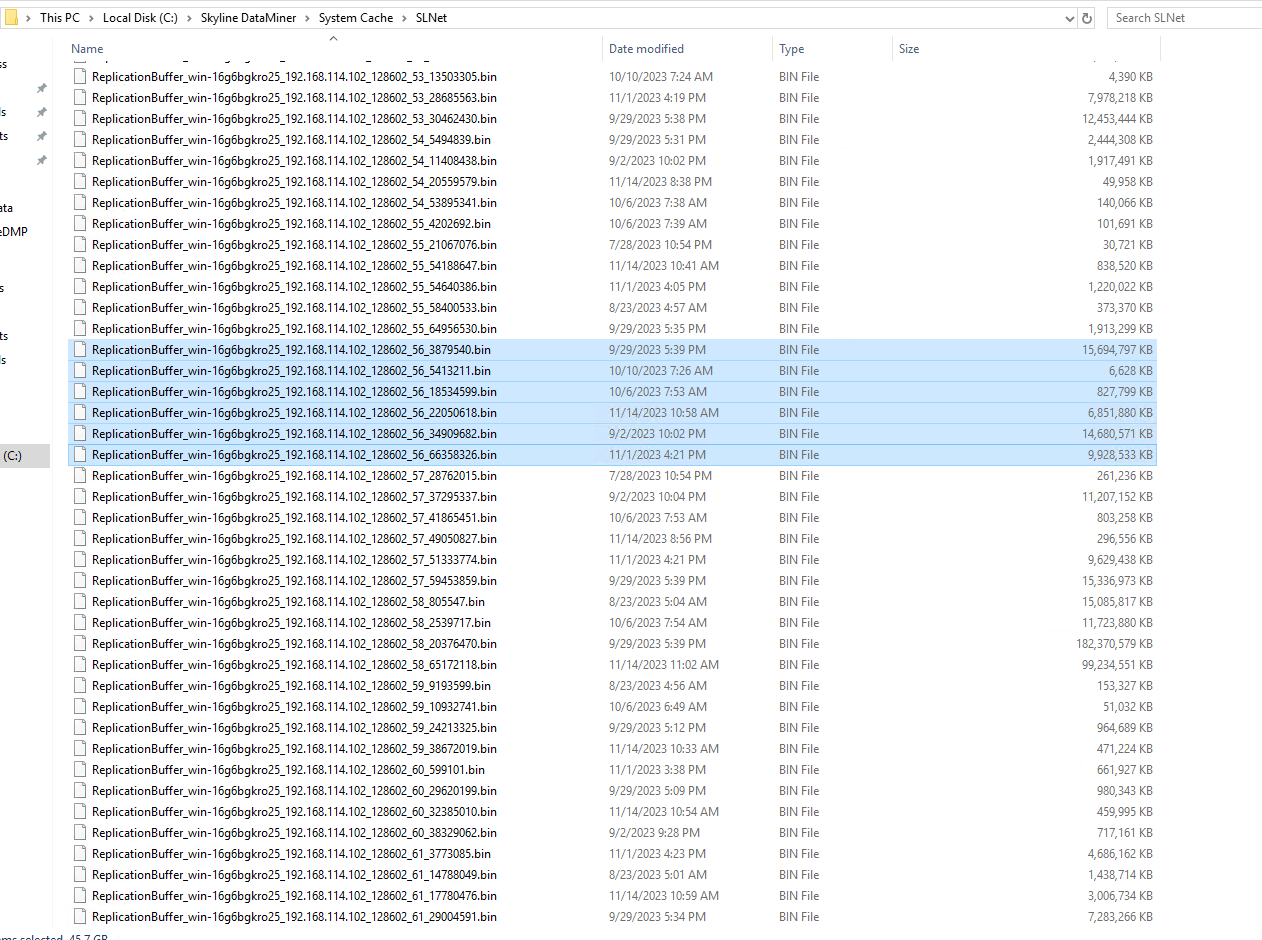

We noticed multiple large files in the folder C:\Skyline DataMiner\System Cache\SLNet.

I don't want to disable replication buffering, but why are there multiple files for each replicated element here? And why are they so large?

TIA 🙂

Hi Arun,

I'm not an expert on this matter, so my apologies if my explanation is not fully correct. AFAIK these files contain data point updates that are passed from the source to the destination; once they have been placed on the destination's disk, they are processed. To get more statistics on the data being sent from source to destination, you can use the following in the Client Test Tool: Diagnostics -> Caches & Subscriptions -> ReplicationBufferStats

I would assume that large files mean the data can't be processed fast enough. Which step is falling behind depends on where you see the files: on the source DMA, it would be the sending of data to the destination DMA; on the destination DMA, it would be the storing of data in DM/DB.
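
If you want to check whether the files are actually growing over time, here is a minimal sketch in plain Python. It is not a DataMiner tool and it assumes nothing about how the files are named or structured; `summarize_folder` is just a hypothetical helper that counts the files directly inside a folder, totals their sizes, and lists the largest ones.

```python
import os


def summarize_folder(folder, top=10):
    """Return (file_count, total_bytes, largest) for files directly in `folder`.

    `largest` is a list of (size_in_bytes, file_name) tuples, biggest first,
    limited to `top` entries. Subfolders are ignored.
    """
    sizes = []
    for entry in os.scandir(folder):
        if entry.is_file():
            sizes.append((entry.stat().st_size, entry.name))
    sizes.sort(reverse=True)
    total = sum(size for size, _ in sizes)
    return len(sizes), total, sizes[:top]


# Example call (path taken from the question above):
# count, total, largest = summarize_folder(r"C:\Skyline DataMiner\System Cache\SLNet")
# print(count, "files,", total, "bytes in total")
# for size, name in largest:
#     print(size, name)
```

Running this a few times, some minutes apart, on the source and on the destination DMA should show whether the total keeps climbing. If it does, that would fit the assumption that the updates are not being processed as fast as they arrive, but I can't say for sure that this is the cause.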