Hi, in a project that we’re working on, DataMiner is being used to replace a legacy fault management system. We have been provided some numbers around alarming that we should expect:

1) The total number of alarms currently stored and maintained by the legacy fault management system. The most recent figure is around 25 million alarms (both historical and active), of which about 10 million are clear alarms.

2) An alarm rate of between 4,000 and 12,000 per day on a typical day, maxing out at 83,000 on at least one day in the last 3 years.

Can we have some advice on how to use these numbers to estimate the storage requirements (Elastic and Cassandra)?

We would also like some guidance around whether the rate of alarms provided is within the limits of what DataMiner has been validated to handle.

Hi Bing,

Here are some alarm metrics I have from a production system that has been running for approx. 10 months. In this architecture, the DataMiner cluster (6 nodes, Windows Server OS), the Elasticsearch cluster (3 nodes, Red Hat Linux) and the Cassandra cluster (3 nodes, Red Hat Linux) are hosted in a VM environment where every node has its own independent VM guest image:

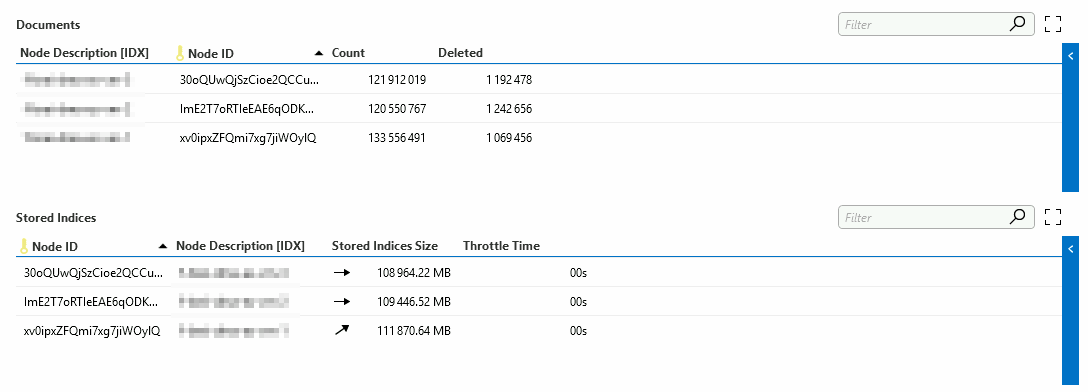

Currently the Elasticsearch cluster is holding approx. 176,000,000 unique documents (a document is comparable to a row in a table-based database). This results in a total dataset size of approx. 330 GB, which is spread over 3 nodes, so each node currently holds approx. 110 GB of data on disk.

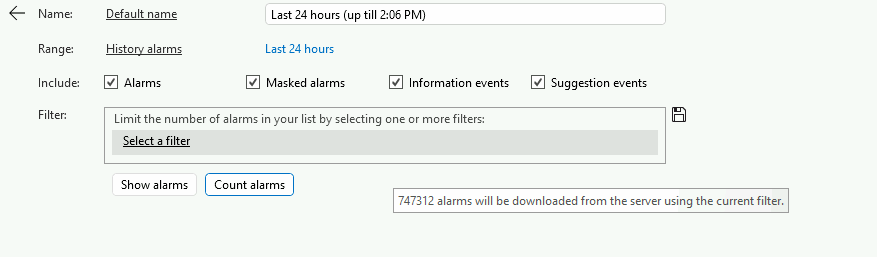

There's quite a bit of alarm and info event activity on this particular platform. A count of all activity over the last 24 hours tells me that 747,312 alarm records / documents were added.

The total stored index size on the cluster currently increases by approx. 1.7 GB a day, which translates to an increase per node of approx. 600 MB a day.
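The per-node figure follows from dividing the daily growth by the node count. A minimal Python sketch of that arithmetic, assuming the data is spread evenly over the 3 Elasticsearch nodes and that growth stays linear (both are simplifications, and the function names are only illustrative):

```python
def growth_per_node_gb(total_growth_gb_per_day: float, nodes: int) -> float:
    """Daily index growth per node, assuming an even spread across nodes."""
    return total_growth_gb_per_day / nodes


def projected_size_gb(current_gb: float, growth_gb_per_day: float, days: int) -> float:
    """Linear projection of the total index size after `days` days."""
    return current_gb + growth_gb_per_day * days


per_node = growth_per_node_gb(1.7, 3)
print(f"Growth per node: {per_node * 1000:.0f} MB/day")
print(f"Projected cluster size in one year: {projected_size_gb(330, 1.7, 365):.0f} GB")
```

The exact split is a little under 600 MB per node per day, so the 600 MB figure is a rounded-up value.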

Hi Bing,

Did you give the node calculator a try already?

It should be able to give you a general sense of what will be required.

That covers not only the databases, but also the number of DataMiner nodes you will need, based on the number of elements and the expected trending and alarming.

Hi Ive, yes I did look at the calculator. The inputs require a number of 'alarm updates', which is a stat that we do not have. We do have the number of new alarms raised per day, but I think 'alarm updates' involves more than just new alarms.

Secondly, I think the calculator assumes a fixed retention period, which is currently not adjustable.

Given that we know the number of alarms in the legacy system's database (accumulated over a number of years), I'm looking for a way to translate this into Elastic and Cassandra storage size in a DataMiner System. I think this method would give us a better estimate of what we're looking at over a number of years.

Taking the example provided by Jeroen, 747,312 alarms resulting in 1.7 GB implies a per-alarm size of 2,443 bytes (counting 1 GB as 1024³ bytes).
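The per-alarm figure can be reproduced with a few lines of Python. This is only a sketch of the division, and the `bytes_per_record` helper is an illustrative name; it also shows how the result shifts if "GB" is read as decimal gigabytes instead of binary ones:

```python
GIB = 1024 ** 3  # assumption: "GB" is read as binary gigabytes (GiB)


def bytes_per_record(growth_gb: float, records: int, bytes_per_gb: int = GIB) -> float:
    """Average on-disk bytes per record for one day of index growth."""
    return growth_gb * bytes_per_gb / records


binary = bytes_per_record(1.7, 747_312)
decimal = bytes_per_record(1.7, 747_312, bytes_per_gb=10 ** 9)
print(f"binary GB:  {binary:.0f} bytes per record")
print(f"decimal GB: {decimal:.0f} bytes per record")
```

The binary reading gives about 2,443 bytes and the decimal reading about 2,275 bytes. Note that the 747,312 count covers all alarm records and info events added in 24 hours, so this is a size per stored record rather than per newly raised alarm.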

Would it make sense to use this per-alarm size to calculate the Elastic and Cassandra storage, or is this an over-simplification of the actual situation?

– Cassandra storage = (total historical alarms + clear alarms) * 2,443 bytes

– Elastic storage = total current alarms * 2,443 bytes

Are there any other considerations we need to factor in (other than the replication factor)?
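To make the proposed calculation concrete, here is a rough sketch in Python. It assumes that every alarm costs the same 2,443 bytes in either database and that each replica stores a full copy. The replication factor of 3 is a placeholder rather than a recommendation, and the helper name is illustrative only:

```python
BYTES_PER_ALARM = 2_443  # per-record size derived earlier in this thread
GIB = 1024 ** 3


def estimate_storage_gib(alarm_count: int,
                         bytes_per_alarm: int = BYTES_PER_ALARM,
                         replication_factor: int = 1) -> float:
    """Rough storage estimate in GiB: count * size * number of copies."""
    return alarm_count * bytes_per_alarm * replication_factor / GIB


legacy_alarms = 25_000_000        # total alarms in the legacy system
new_per_year = 12_000 * 365       # upper end of a typical day, every day

for rf in (1, 3):  # rf=3 is a placeholder, not a recommendation
    legacy = estimate_storage_gib(legacy_alarms, replication_factor=rf)
    yearly = estimate_storage_gib(new_per_year, replication_factor=rf)
    print(f"RF={rf}: legacy data {legacy:.1f} GiB, yearly growth {yearly:.1f} GiB")
```

Without replication, the 25 million legacy alarms come to roughly 57 GiB under these assumptions. Since one alarm can produce several stored records through updates, the real footprint may be higher than a count of raised alarms suggests.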

Hi Jeroen, thanks for sharing what is being observed on an actual production system. It appears that the 6-node DMS is capable of handling ~750K alarm records per day. That averages out to about 125K per day per node, which is above the alarm rates in the scenario I provided.
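The comparison can be written out as a short Python sketch. It assumes the load is spread evenly over the 6 DataMiner nodes, and it treats the observed figure as a data point from one production system, not as a validated limit:

```python
def per_node_rate(records_per_day: int, nodes: int) -> float:
    """Average records per day per node, assuming an even spread."""
    return records_per_day / nodes


observed = per_node_rate(747_312, 6)
print(f"Observed average per node: {observed:,.0f} records/day")

# Alarm rates from the legacy system, compared against a single node's average.
for label, rate in (("typical high", 12_000), ("3-year peak", 83_000)):
    print(f"{label}: {rate:,} alarms/day is {rate / observed:.0%} of that average")
```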