Hi all,

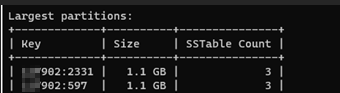

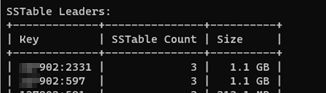

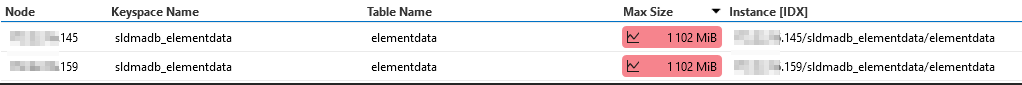

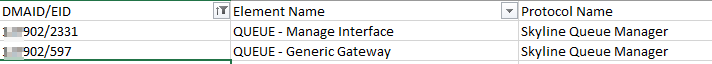

We have these two elements in our DMA with large partitions:

The Skyline Queue Manager elements are used by our provisioning solution to pass tokens and associated data from one process to the next. When we look at the Queue elements, they do not show large tables. During the provisioning process, many tokens can be generated. The tokens appear in the Queue element's Incoming Tokens queue, then move to the Outgoing table and finally to the Completed table. But after the provisioning process these tables are empty, because the tokens have been processed. So:

1. What data is actually held in the partitions that correspond to the queue elements, and does it need to persist across different provisioning runs?

2. What is the impact of these elements having large partitions, and what can be done to reduce the size of these partitions?

Thank you in advance.

I had a quick look at this connector. It is used to keep track of tokens in PA. The 1.0.1.X range saves all tokens (incoming, outgoing and completed) in tables. When a table has saved columns (the PK and DK columns are saved by default unless you use the volatile option), the data is pushed to the database, so that it is still there when the element restarts. This data is stored in Cassandra.

Without going too deep into the details, Cassandra keeps track of every manipulation on a table, so a partition can be larger than the actual data it holds. This ensures that, when Cassandra runs on multiple nodes, every node can work out what happened. For example, if a row is deleted while one of the Cassandra nodes is down, the other nodes can tell that node when it comes back up that the row was removed at a later time than the insert it still holds.

So if a connector has a table that is not volatile, with saved columns whose values change a lot, you can end up with large partitions. This is something you should avoid, as large partitions result in high memory and CPU usage.
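To make the partition-growth point concrete, here is a minimal Python sketch. It assumes a heavily simplified model of a Cassandra partition in which every insert, update and delete is appended as a timestamped cell (Cassandra calls the delete markers tombstones) and the newest timestamp wins. The names `Partition`, `merge` and `run_provisioning` are hypothetical, and compaction and tombstone expiry are left out, so this is not how Cassandra or the connector are implemented; it only illustrates why tables that look empty can still sit on large partitions.

```python
import itertools
from dataclasses import dataclass, field

TOMBSTONE = object()  # marker stored in place of a value when a row is deleted


@dataclass
class Partition:
    """Hypothetical, heavily simplified model of one Cassandra partition.

    Every insert, update or delete is appended as a new timestamped cell;
    a delete adds a tombstone instead of removing earlier cells.
    Compaction and tombstone expiry are deliberately left out.
    """
    cells: list = field(default_factory=list)  # (timestamp, row_key, value)

    def write(self, ts, key, value):
        self.cells.append((ts, key, value))

    def delete(self, ts, key):
        self.cells.append((ts, key, TOMBSTONE))

    def live_rows(self):
        # Last write wins: for each key, the cell with the highest timestamp decides.
        latest = {}
        for ts, key, value in self.cells:
            if key not in latest or ts > latest[key][0]:
                latest[key] = (ts, value)
        return {k: v for k, (_, v) in latest.items() if v is not TOMBSTONE}


def merge(a, b):
    """Combine what two replicas know; last write wins resolves conflicts."""
    return Partition(cells=a.cells + b.cells)


def run_provisioning(num_tokens):
    """Move tokens Incoming -> Outgoing -> Completed, then clear Completed."""
    tables = {name: Partition() for name in ("incoming", "outgoing", "completed")}
    clock = itertools.count(1)  # monotonically increasing write timestamps
    for token in range(num_tokens):
        tables["incoming"].write(next(clock), token, "new")
        tables["incoming"].delete(next(clock), token)
        tables["outgoing"].write(next(clock), token, "sent")
        tables["outgoing"].delete(next(clock), token)
        tables["completed"].write(next(clock), token, "done")
    for token in range(num_tokens):  # end of run: the tables look empty again
        tables["completed"].delete(next(clock), token)
    return tables


tables = run_provisioning(1000)
for name, part in tables.items():
    print(name, "live rows:", len(part.live_rows()), "stored cells:", len(part.cells))

# Node B is down while the delete happens, so it only knows about the insert.
node_a, node_b = Partition(), Partition()
for node in (node_a, node_b):
    node.write(1, "token-42", "new")
node_a.delete(2, "token-42")
print(node_b.live_rows())                 # stale view on node B: {'token-42': 'new'}
print(merge(node_a, node_b).live_rows())  # newer tombstone wins: {}
```

With 1000 tokens, each table reports 0 live rows but 2000 stored cells, which mirrors the situation in the question: the tables look empty, yet the partitions are not. The last lines replay the node-down example: node B still sees the row until its data is merged with node A's newer tombstone.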

This is why, in the newer 1.0.2.X range of the connector, the saved tables were removed and replaced by a single volatile table that holds the active tokens. So I would advise checking whether you can upgrade the Process Automation solution to a later version that fixes this problem.
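The difference between the two connector ranges can be sketched by counting database writes. This is a hypothetical Python model, not the connector's code: `TokenTable`, `old_design` and `new_design` are illustrative names, and it assumes that the 1.0.2.X table tracks a token's progress by updating its row in memory and removes the row once the token is finished.

```python
class TokenTable:
    """Hypothetical token table that counts database writes.

    A saved (non-volatile) table persists every change, deletes included;
    a volatile table only lives in the element's memory.
    """

    def __init__(self, volatile):
        self.volatile = volatile
        self.rows = {}
        self.db_writes = 0

    def _persist(self):
        if not self.volatile:
            self.db_writes += 1

    def set(self, key, value):
        self.rows[key] = value
        self._persist()

    def remove(self, key):
        self.rows.pop(key, None)
        self._persist()  # for a saved table, a delete is also a write


def old_design(num_tokens):
    """Assumed 1.0.1.X behaviour: three saved tables, one per stage."""
    incoming, outgoing, completed = (TokenTable(volatile=False) for _ in range(3))
    for token in range(num_tokens):
        incoming.set(token, "new")
        incoming.remove(token)
        outgoing.set(token, "sent")
        outgoing.remove(token)
        completed.set(token, "done")
        completed.remove(token)
    return incoming.db_writes + outgoing.db_writes + completed.db_writes


def new_design(num_tokens):
    """Assumed 1.0.2.X behaviour: one volatile table with active tokens only."""
    active = TokenTable(volatile=True)
    for token in range(num_tokens):
        active.set(token, "new")
        active.set(token, "sent")  # state change is updated in memory
        active.remove(token)       # finished tokens simply leave the table
    return active.db_writes


print("1.0.1.X-style writes:", old_design(1000))
print("1.0.2.X-style writes:", new_design(1000))
```

Under these assumptions, 1000 tokens produce 6000 persisted writes in the saved-table design and none in the volatile one, which is why the volatile approach avoids growing partitions. The trade-off is that a volatile table does not survive an element restart.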