Hello Dojo community,

I’m currently working on automating a process within DataMiner that involves handling ZIP files within the Documents module. Here’s a concise overview of my objective:

- ZIP files are manually stored in a specific folder within the Documents module.

- Automate the transfer of the updated ZIP file location into an element table by adding a new row (contents of the zip folder do not need to be read).

- Automate the activation of the associated ZIP file location for the corresponding row in the element table. Currently, whenever a new file location row is manually added to the table, the file is set to inactive and needs to be activated (activating it deactivates the previously uploaded file location).

I aim to achieve this by executing a C# automation script. I am not sure how to retrieve the file location from the Documents folder, as the files there do not have a parameter ID that I could use with the GetParameter methods.

Any specific methods, code snippets, or examples related to importing the zip file location, updating the element table, and activating the file within this automation task would be greatly appreciated.

Fig: element table preview

Thank you in advance for your assistance!

Regards,

ABM

Hi,

If I understood correctly, you need to know how to read the contents of the directory where the ZIP files are stored and then, using the file names, add rows to an element table that keeps track of these files.

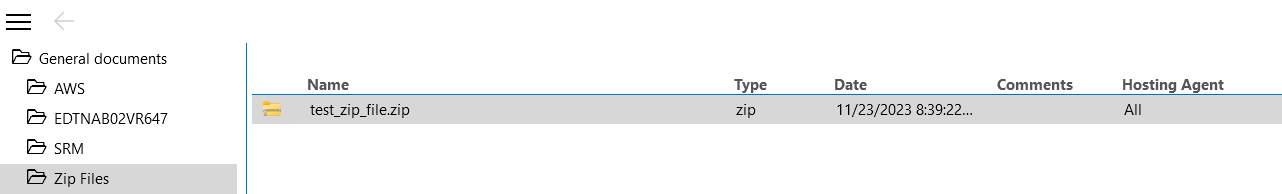

When you add files using the Documents module in Cube, you will most probably use the common folder and create a subfolder to put the ZIP files in. For example, I created a folder called Zip Files within the General Documents folder:

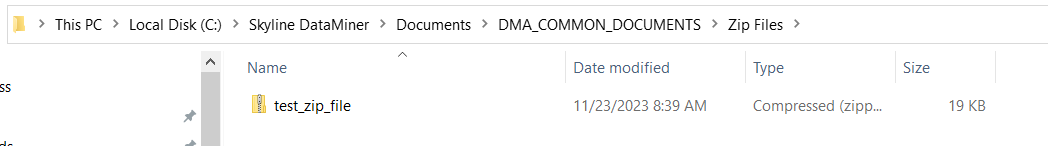

I then uploaded a test file test_zip_file.zip. As a result, on the DMA server, the following directory was added to contain the test file:

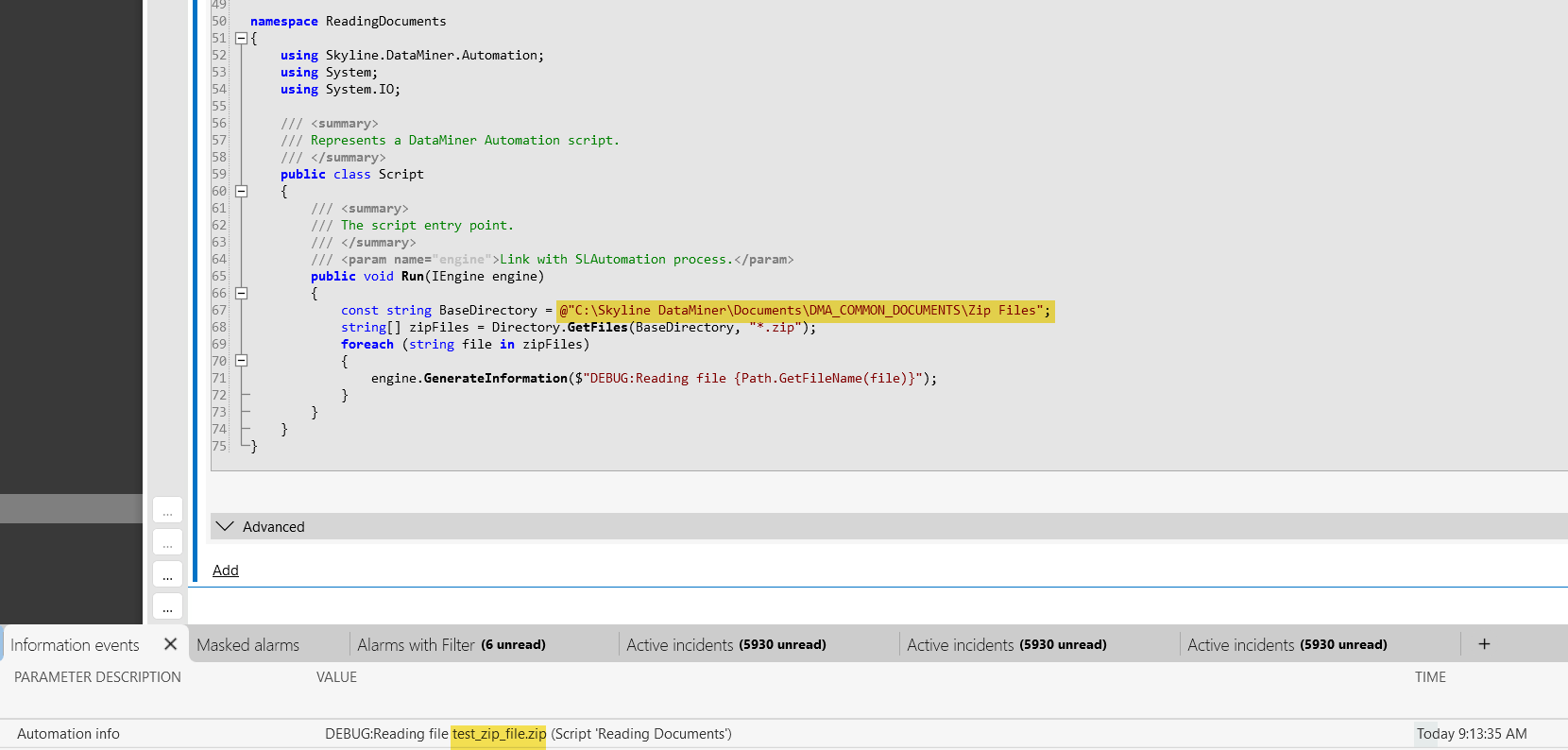

You can then use an automation script to list the contents of the designated folder, extracting the name of each found file and using it to modify the element. Because I don’t know the protocol you are using, the sample automation script is only reading the files and printing the name on the information console:
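A minimal sketch of such a script could look like the one below. The folder path is an assumption: it supposes a default installation where General Documents are stored under `C:\Skyline DataMiner\Documents\DMA_COMMON_DOCUMENTS` and that the subfolder is named Zip Files as in the example above, so adjust it to match what you see on your DMA.

```csharp
using System.IO;
using Skyline.DataMiner.Automation;

public class Script
{
    // Assumption: default server-side location of the "Zip Files" subfolder
    // created under General Documents. Verify this path on your own DMA.
    private const string ZipFolder =
        @"C:\Skyline DataMiner\Documents\DMA_COMMON_DOCUMENTS\Zip Files";

    public void Run(Engine engine)
    {
        if (!Directory.Exists(ZipFolder))
        {
            engine.GenerateInformation("Folder not found: " + ZipFolder);
            return;
        }

        // List only the ZIP files; their contents are never opened.
        foreach (string filePath in Directory.GetFiles(ZipFolder, "*.zip"))
        {
            string fileName = Path.GetFileName(filePath);
            engine.GenerateInformation("Found ZIP file: " + fileName);
        }
    }
}
```

The script runs on the DMA that executes it, so the path must exist on that server.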

Inside the loop, you can, for instance, check whether the file has already been added to the table to avoid duplicates, and implement any other logic you need to react accordingly.
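As an illustration of the duplicate check, here is a small helper that is independent of any protocol. The class and method names are hypothetical, and it assumes the locations already present in the table have been read into a collection of strings beforehand.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ZipFileFilter
{
    // Returns the files on disk that are not yet listed in the table.
    // Paths are compared case-insensitively, as Windows paths are.
    public static List<string> FindNewFiles(
        IEnumerable<string> filesOnDisk,
        IEnumerable<string> locationsInTable)
    {
        var known = new HashSet<string>(locationsInTable, StringComparer.OrdinalIgnoreCase);
        return filesOnDisk.Where(path => !known.Contains(path)).ToList();
    }
}
```

Only the paths returned by `FindNewFiles` would then be added as new rows.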

Note that this logic can also be implemented directly in the protocol, so that the element periodically checks a target folder for new entries. I would suggest getting in contact with your designated TAM to discuss the different alternatives for your use case.

Finally, I noticed that you posted this other question a few days ago, very similar to this one.

Hi,

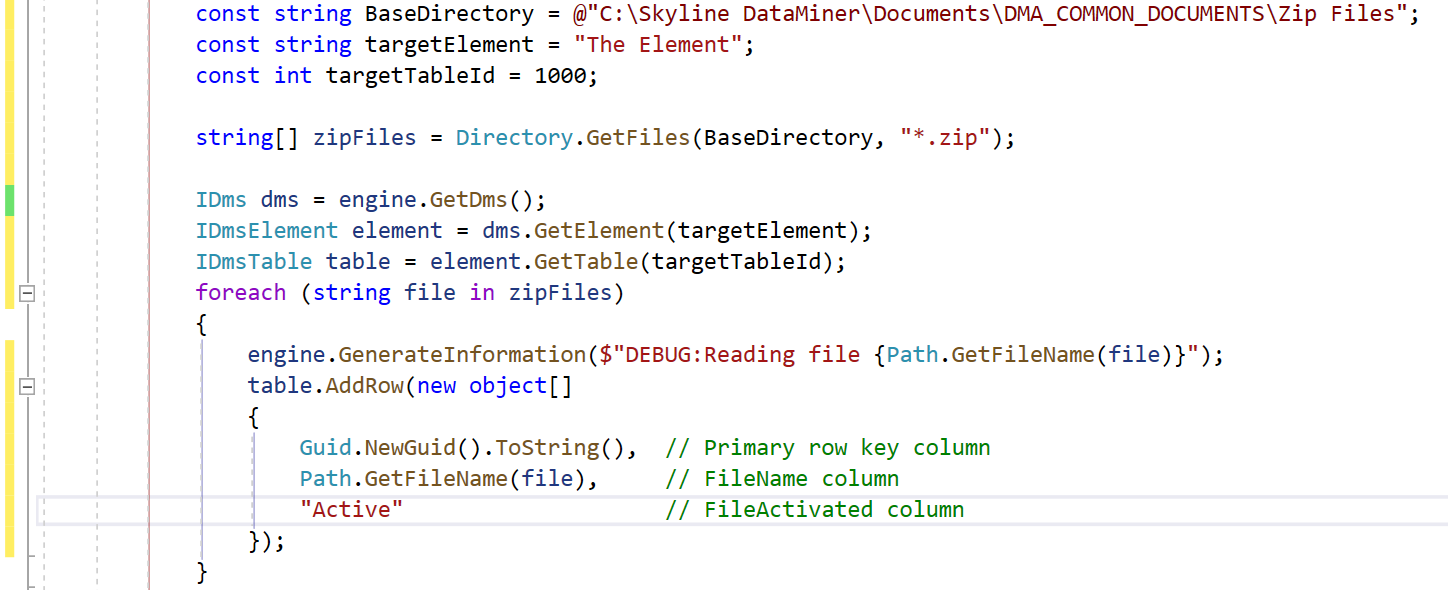

In continuation with your comment above, it seems that you may need to use our DataMiner Core Automation NuGet package to get a reference of an IDmsTable and then add new rows when reading the contents of the base folder:
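A rough sketch of that approach follows, assuming the `Skyline.DataMiner.Core.DataMinerSystem.Automation` NuGet package is referenced in the script. The element name, table ID, column ID, folder path and row layout are all hypothetical placeholders, since I do not know your protocol. It also assumes that the file location is the primary key of the table and that writing `Active` to the write parameter of the File Activated column is what activates a file.

```csharp
using System.IO;
using System.Linq;
using Skyline.DataMiner.Automation;
using Skyline.DataMiner.Core.DataMinerSystem.Automation;
using Skyline.DataMiner.Core.DataMinerSystem.Common;

public class Script
{
    // Hypothetical placeholders: replace with the values from your own setup.
    private const string ZipFolder =
        @"C:\Skyline DataMiner\Documents\DMA_COMMON_DOCUMENTS\Zip Files";
    private const string ElementName = "My Element";
    private const int TableId = 1000;
    private const int FileActivatedWriteColumnId = 1052;

    public void Run(Engine engine)
    {
        if (!Directory.Exists(ZipFolder))
        {
            engine.GenerateInformation("Folder not found: " + ZipFolder);
            return;
        }

        IDms dms = engine.GetDms();
        IDmsElement element = dms.GetElement(ElementName);
        IDmsTable table = element.GetTable(TableId);

        // Oldest first, so the last row added belongs to the newest file.
        FileInfo[] zipFiles = new DirectoryInfo(ZipFolder)
            .GetFiles("*.zip")
            .OrderBy(file => file.LastWriteTimeUtc)
            .ToArray();

        string lastAdded = null;
        foreach (FileInfo file in zipFiles)
        {
            // Assumption: the file location is the primary key of the table.
            if (table.RowExists(file.FullName))
            {
                continue;
            }

            // Assumption: the row layout is { file location, file activated }.
            table.AddRow(new object[] { file.FullName, "Inactive" });
            lastAdded = file.FullName;
        }

        if (lastAdded != null)
        {
            // Assumption: setting the write column to "Active" activates the file.
            table.GetColumn<string>(FileActivatedWriteColumnId).SetValue(lastAdded, "Active");
            engine.GenerateInformation("Activated " + lastAdded);
        }
    }
}
```

Whether a row can be added this way, and whether the set is accepted immediately after the row is added, depends on how the protocol handles the table, so please treat this as a starting point rather than a finished script.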

Dear Gelber,

I appreciate your previous guidance. I aim to import the file location from the DM common documents module. However, I’m looking to enhance the automation script further. The current code snippet displays the file location in the Info event console, yet my goal is to add this information as a new row in a specific table within the element. This table comprises two columns: “file location” and “file activated” (with statuses ‘inactive’ and ‘active’) as per the table protocol outlined below:

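- Name: FileActivated
- Description: File Activated(FileActivatedn)
- Type: write
- Interprete: string, other, next param
- Measurement type: discreet (a commented-out entry is also present: <!--string-->)
- Discreet entries: Active (value: Active), Inactive (value: Inactive)
- RTDisplay: true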

Additionally, after adding the new row, I want to activate the associated ZIP file (changing it from ‘inactive’ to ‘active’). The protocol definition of the ‘FileActivated’ parameter indicates it is a string type, and I wonder whether invoking zipfilelocation.Activate() would suffice or whether I should use SetParameter.

I acknowledge that adapting the protocol might simplify this process. However, I’m keen on using an automation script triggered each time a user presses the execute button.

Thank you for your assistance in addressing these concerns.