User info

| First name | Rene |
| Last name | De Posada |

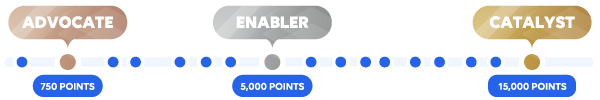

DevOps Program

| Acquired rank | Advocate |
| Points progress | 39 DevOps Points |

Here are a few tips to level up your DevOps game and unlock an arsenal of perks and benefits.

| DevOps attestation | Request your attestation ID and expiry date |

Achievements


Questions asked

Answers given

A custom command that returns the most important health KPIs of my DMS at any given moment: Tracking the health of a DataMiner System | DataMiner Docs

Hi Adama, Not sure which item you are trying to recover but here is some information regarding the restoration of certain DataMiner components. Recycle bin | DataMiner Docs Backup - Using the recycle...

It is possible to execute a script from a driver. To do so, call the ExecuteScript method as specified in the connector development user guide (Link).

Rebecca, While the number of columns is very flexible, there are limitations linked to the maximum number of parameters (see Limits) that a single DMA can host. In the case of tables, each cell counts...

Bing, The reason you are not able to see the DataMiner IDP Connectivity element in your alarm filters (see Link) is that this element has been hidden by default for the past few IDP releases. To show this...

Hi David, Below is an example of how to achieve the results you are looking for. Using a Microsoft Platform element Configure a GQI query using the "Get alarms" data source Filter by Element name...

Hi Jeff, Please see some answers below. See answers here: (https://docs.dataminer.services/user-guide/Advanced_Functionality/DataMiner_Agents/Installing_a_DMA/Installing_DM_using_the_DM_Installer.html#custom-dataminer-installation)...

Hi Joe, Could you try adjusting the custom bindings following the specifications for the element properties (Syntax of OIDs referring to properties)? The OIDs shown above appear to be missing parts of...

Philip, DataMiner 10.0.13 was the last feature release, after which the 10.1.0 main release became generally available. Please also see DataMiner Main Release vs. Feature Release | DataMiner Docs. With the...

Roger, This is a perfect use case for DataMiner and the architecture is rather flexible. Please see below for a common approach. DataMiner receives trap or generates native event/alarm DataMiner...

Hi Jens, Expanding on Edson's response, for as long as the connectivity script has access to the DCF configuration (we have used flat files in the past, but if access to DOM is possible then that would...

Paul, DataMiner Cube is only supported on Windows. From a Mac OS or any other operating system, you can access the DataMiner functionality using a standard browser via the DataMiner web applications....

Hi Stefan, Another alternative could be to create a dashboard and use the native query filter to search across all elements running a specific protocol. Step 1: Create a query that fetches the target...

Bruno, Time to live is all about data retention. Depending on the DataMiner version, these settings may be found in different locations. I will try to explain using the latest feature release as a reference...

Javier, If I understand your question correctly, you are looking to perform the local offload every 20 minutes but want the actual forwarding to happen every 30 minutes. For this, you'd need to specify...

Hi, Yes, it is possible to display the list of alarms associated with a group (e.g., under a view) of DataMiner entities in the web apps. Using the alarm component (Alarm table | DataMiner Docs), it...

Hi Dennis, The inter-DMA synchronization is handled by the SLDMS process. You can find an overview of DataMiner processes here (Link). For more information on synchronization, I recommend the following...

Hi Tser, DataMiner already integrates with devices (IoT) using MQTT. You can use readily available NuGet packages such as this (MQTTnet) to write a DataMiner connector (protocol, driver) to bring data...

Hi Steve, This is possible by passing the information in the dashboard URL. Please see [Specifying data input in a dashboard URL | DataMiner Docs]. Thanks,

Hi Steve, The cloud team is working diligently to bring relevant information associated with packages directly to the Catalog. However, at the moment, this information can be found in multiple...

Jesus, A DCF connection is just a link between two known interfaces, in your case between devices. Therefore, having both devices confirm the relations is always the preferred approach. Having said...

Cristel, Could it be that the element is actually hidden and not deleted? Hidden elements | DataMiner Docs If, for some reason, the element went into a rogue state, you could try using the client tool...

Dario, View tables are normally used to bring data from actual tables into a unified view. This means that the alarming should be applied against the actual parameters holding the data. If you enable...

Yvan, For security reasons, we always recommend using HTTPS when accessing DataMiner via a web browser, and if the correct settings have been applied (Setting up HTTPS on a DMA | DataMiner Docs) you should...

Depending on how you are implementing the connection (standard or via QAction), I'd say you can either disable timeouts for the specific connections or use group conditions to not even execute the sessions...

Sebastian - Things you could try: Use a service protocol on your services and have a parameter with the name of the service parent view Name your services in a way that you can identify them by...

Jeff, This has the potential of creating problems even for Cassandra. At such a high frequency, we have seen that a large number of tombstones (unprocessed records) start accumulating in Cassandra, which...

Wale, It does not seem possible to do this for all elements in the system using GQI. Using the Get Parameter By ID gives you access to the Created Property but only for a single element. However, you could...

Alberto, The export to CSV file has limited information and it is not the intention (or secure) to handle credentials via this operation. For your use case targeting SNMPv3 I would recommend using the...

For this use case, the Pivot table would be more than enough. You can use the parameter feed and pass the parameters you need to the table. You could also add avg, min, and max to the results. Showing...

Hi Roger, Based on your description, it seems you need one of the deployment methods without automatic updates. You can find the various options here: DataMiner Cube deployment methods | DataMiner...

Hi Paul, There are a few considerations when setting up the anomaly detection, which might be causing your configuration not to render the expected results. Below are some of them: Ensure that the...

Hi Shawn, Just tested against 10.3.12.0 and see the same behavior. This seems to me to be a design choice, as the masked alarm is still linked to the view. Moving the element out of the view resets the latch...

Hi Samson, If you are using an indexing engine (Elastic, etc.), this post provides an explanation as to how to go about estimating the memory space alarms and information events could take on a system...

Alberto, You can configure Cube to only use polling (see Eventing or polling | DataMiner Docs). This is less performant but should align with what you are trying to achieve, if I understand correctly.

Hi Stacey, The migration to Cassandra procedure can be found here (Migrating the general database to Cassandra | DataMiner Docs). To install Cassandra (Linux is now recommended as the OS): Installing Cassandra...

Tiago, If you are looking to load a large number of alarms into the dashboard, I'd recommend using GQI (Link) and the table component (Table | DataMiner Docs). This combination allows you to make use of...

Tobias, Most, if not all, of the tasks mentioned above (correlations are tricky (see https://community.dataminer.services/question/mechanism-to-export-correlation-rules/)) should be possible via automation....

Naveendran, Block storage is used by instances/VMs for consistent data storing and this is what should be used for the standard DataMiner, Cassandra, and Elastic installations. File/object storage such...

Jarno, I don't see any reason to drift away from the general recommendations of 30-50 ms (https://community.dataminer.services/dataminer-compute-requirements/). Thank you,

Bernard, An option would be to keep the rows in the table for a certain period of time after they are no longer being returned and use a column to mark the row accordingly (e.g., Present or Removed), and then...

Another option could be to enable data offload via file export for the specific parameters. This will allow you to get the offloaded data in CSV files following the specified data resolution....

Christhiam, Looking at the numbers as presented above, the node calculation seems reliable and it should cover your numbers safely. The multi-cluster approach is the recommended architecture, and indeed,...

Alberto, The situation you explain is very common for connectors that target a specific product line. The bottom line is that as vendors evolve their offerings, APIs, MIBs, etc., also evolve to accommodate...

Flavio, This should be possible by implementing the appropriate logic within the service definition connector, since there are already calls that could be used to retrieve the properties associated...

Jochen, It is possible to integrate with Java using .NET and we have done this in the past by converting the native JAR libraries into DLLs that can be used by DataMiner connectors. The tool we have used...

Robin, As specified in the connector development user guide (Link), you can have access to all service parameters using the Engine methods. A snippet of code would look like this: Thank you,

This use case can be achieved using the Dashboards app GQI or Pivot table component. Using Pivot table Configure the component to report on one or more parameters Enable trend statistics A CSV...

Hi Daniel, If you are trying to get data from the actual elements linked to the service using the service as reference, it is currently not possible via the query UI. I can think of a couple of options...

Hi Dennis, As explained in this tutorial (Automatically detect anomalies with DataMiner), it is recommended that at least one week of data is present, and that both real time and average trending are...

Hi Henri, As stated in the documentation [Link], the user settings are stored on the DataMiner server, so if you have admin access to the system and if your IT policies allow it, you could try copying...

Hi Philip, Based on your description where you are currently running Cassandra and DataMiner on the same machine without indexing, you could move into any configuration described here (Separate Cassandra...

Hi Ciprian, The indexing engine (Configuring an indexing database | DataMiner Docs) is necessary if specific features (DOM, SRM, UDAs, specific GQI data sources) will be used in combination with LCAs....

Just updating the post with the results of our investigation. As it turns out, the alarms mentioned in the original post had already been generated prior to the introduction of the RCA links, which mean...

Hi Kristopher, With the pivot table (Pivot table | DataMiner Docs) in dashboards, you can easily achieve this behavior. Below you see an example where the Avg, Min and Max are shown for the Total Processor...

Michiel, Hard to tell where the issue may be with history sets, but below are some possibilities that we have seen impacting history sets: Could it be that some data points coincide with the exception...

Bruno - the storage architecture for distributed systems continues to be the same (Recommended setup: DataMiner, Cassandra, and Elasticsearch hosted on dedicated machines, with a minimum of three Elasticsearch...

Randy - Could you try applying the conditions to the Configure Indices section instead of the Configure Elements section and see if it works that way?

Paul, Multithreading could also be a viable option for the use case above since the tasks executed by the QActions are logically decoupled. Multi-threading | DataMiner Docs

Wil, Redundant connections are leveraged as described here: Redundant polling | DataMiner Docs Other useful sources are: PortSettings element | DataMiner Docs Type element | DataMiner Docs Session...

Bernard - This is not currently possible in the way you present it here. However, you can make use of custom properties to play with a custom parameter description.

Ryan, While the time zone where the servers are located does not matter, it is recommended that all servers in the cluster (DataMiner, general database, and indexing engine) are using a synchronized time...

Daniel, Q1.A: it does not seem possible to show the legend anywhere other than at the bottom of the graph. Note that you could decide to collapse the legend by default. Q2.A: it does not seem...

We were recently asked to provide something similar, and a workable solution would be to use the information events. Every time a user logs in/out of DataMiner, an associated information event is logged....

Stijn, If SLNET was not running, DataMiner was not operational. If this happened during the upgrade attempt, the upgrade shouldn't have been successful either. To determine what could have caused the...

Javier, As specified in the DataMiner User Guide (Configuration of DataMiner Processes), it is possible to configure how many of these processes run within each DataMiner Agent. However, please keep in...

This use case could be achieved via the Dashboards Alarm component (see DataMiner Dashboards for more on this app), which could be set up to include only timeout events and further refined to any specific...
