We have a situation where our service (and the service-level view in DataMiner Cube) shows a critical alarm even though the Alarm Console no longer contains any active alarms for that service. To get out of this state, we have to clear the latch state on the corresponding element.

DataMiner version: 10.0.9.0-9385-CU1

Client version: 10.0.2026.2800

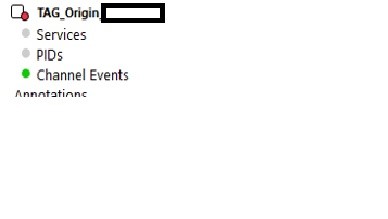

We have numerous services, all associated with the same group of elements. Each service is distinguished by different filter criteria on the element tables. Alarm templates and correlation rules are associated with the elements' parameters. When watching the system, we see alarms raised and cleared as expected (both directly from the elements and from the correlation rule setup), with the service-level severity following the active alarms. Sporadically, however, a service now shows a raised (critical) alarm state even though all the associated alarms have cleared. When we expand the service in the Surveyor to the element level, we can identify the element in alarm (so far, always the TAG element). However, when we expand the service element tree further, the tables and parameters associated with the service show as normal (the image above shows the alarms associated with the Channel Events table as normal).

For now, the only way we have found to get out of this state is to reset the element's latch state, which clears the service-level severity.

What are the next steps for troubleshooting this issue?

Or is this a known issue that is already being worked on?

There are several ways to investigate this, but one of the main things to check is whether there were any run-time errors (RTEs) or other problems at the moment the alarm got "stuck".

You can determine when it got stuck by checking the Reporter timeline of each component. Comparing the time at which all elements cleared with the time at which the service did not clear should tell you when the service failed to clear the critical state of the element.
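If you copy the severity changes from the Reporter timelines by hand, this comparison can be automated with a short script. The sketch below assumes a hypothetical input format (a list of `(timestamp, severity)` changes per component); it is not a DataMiner export format or API, and `severity_at` and `find_stuck_moment` are illustrative names only.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

# Hypothetical input format: severity changes copied by hand from each
# component's Reporter timeline, as (timestamp, severity), sorted by time.
Timeline = List[Tuple[datetime, str]]


def severity_at(timeline: Timeline, t: datetime) -> str:
    """Severity in effect at time t; assumed 'Normal' before the first change."""
    current = "Normal"
    for ts, sev in timeline:
        if ts > t:
            break
        current = sev
    return current


def find_stuck_moment(
    elements: Dict[str, Timeline],
    service: Timeline,
    grace: timedelta = timedelta(0),
) -> Optional[datetime]:
    """First change time at which every element is Normal while the service
    is still not Normal `grace` later; None if that never happens."""
    change_times = sorted(
        {ts for tl in elements.values() for ts, _ in tl} | {ts for ts, _ in service}
    )
    for t in change_times:
        all_clear = all(severity_at(tl, t) == "Normal" for tl in elements.values())
        if all_clear and severity_at(service, t + grace) != "Normal":
            return t
    return None


# Illustrative data only: the service never returns to Normal.
t0 = datetime(2020, 11, 5, 10, 0, 0)
elements = {
    "TAG": [(t0, "Critical"), (t0 + timedelta(minutes=5), "Normal")],
    "Encoder": [(t0 + timedelta(minutes=1), "Major"), (t0 + timedelta(minutes=3), "Normal")],
}
service = [(t0, "Critical")]
print(find_stuck_moment(elements, service, grace=timedelta(seconds=10)))
# prints 2020-11-05 10:05:00
```

The returned timestamp is the starting point for the log checks. A small `grace` value avoids flagging any short delay between the last element clearing and the service following it.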

With this timestamp, you can check SLWatchdog2.txt to find out whether any of the DataMiner Agents had an open RTE at that time. Each service also has its own log file in which you can follow its alarm status.

If there were no issues, you can still look at what is special about the alarms that get stuck. For example, we once had a case where a clear and an alarm arrived within the same second: due to a race condition, the clear was sometimes incorrectly parsed before the alarm, which left the critical alarm stuck.
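The race described above can be illustrated with a small, generic simulation. This is not DataMiner's alarm-handling code: the `AlarmEvent` type, its `seq` field, and `apply_events` are hypothetical names, used only to show why a clear handled before its alarm leaves the alarm stuck, and why one-second timestamps alone cannot restore the correct order.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class AlarmEvent:
    # Hypothetical event shape, for illustration only.
    timestamp: int  # whole seconds, so a raise and its clear can share a value
    seq: int        # order in which the source actually generated the events
    alarm_id: str
    kind: str       # "raise" or "clear"


def apply_events(events: List[AlarmEvent]) -> Set[str]:
    """Replay events in the given order and return the alarms left active.

    Clearing an alarm that is not (yet) active is a no-op, which is how an
    out-of-order clear lets the later raise stay active forever.
    """
    active: Set[str] = set()
    for ev in events:
        if ev.kind == "raise":
            active.add(ev.alarm_id)
        elif ev.kind == "clear":
            active.discard(ev.alarm_id)
    return active


raise_ev = AlarmEvent(timestamp=100, seq=1, alarm_id="channel-events-row-1", kind="raise")
clear_ev = AlarmEvent(timestamp=100, seq=2, alarm_id="channel-events-row-1", kind="clear")
received = [clear_ev, raise_ev]  # the clear happened to be handled first

# Sorting on the one-second timestamp keeps the wrong order (Python's sort is
# stable, so tied events stay in arrival order): the alarm gets stuck.
print(apply_events(sorted(received, key=lambda e: e.timestamp)))
# prints {'channel-events-row-1'}

# Breaking ties with the source sequence number restores raise-then-clear.
print(apply_events(sorted(received, key=lambda e: (e.timestamp, e.seq))))
# prints set()
```

When reviewing stuck alarms, a useful pattern to look for is therefore a raise and a clear for the same alarm carrying the same one-second timestamp.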

Marlies, thanks for the comments. The issue resolved itself when we removed the service with the latched alarm (it was no longer needed in the system). Since then, no other services have shown the latched-alarm issue. If it occurs again, I will follow your steps to identify the cause and gather more information.