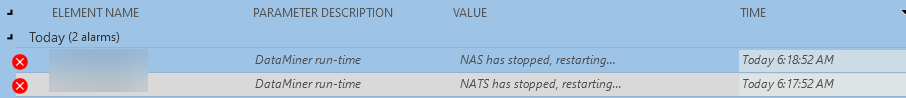

Our client has a cluster running 10.2.0.0-11774-CU3. It was upgraded a few weeks ago and, other than a hardware issue, has been stable. Last night we saw two errors indicating that both NATS and NAS had restarted. Both errors cleared after about two minutes, which I assume is how long the services took to restart.

After the restart everything appears normal. Looking at the trending for that server, I don't see any evidence of a memory leak or that the CPU is/was stressed. I did see this in the SLNATSCustodian log:

2022/07/06 06:13:14.728|SLNet.exe|HandleCustomMessage|ERR|0|266|(Code: 0x800402B8) Skyline.DataMiner.Net.Exceptions.DataMinerCommunicationException: Failed to connect to 100.x.x.88: Timed out (10s)

at Skyline.DataMiner.Net.DataMinerConnection.GetConnection()

at Skyline.DataMiner.Net.DataMinerConnection.HandleMessage(DMSMessage msg)

at Skyline.DataMiner.Net.Apps.NATSCustodian.NatsRoutesArbiterHelpers.GetCredentialsBytes(NatsNode seed)

at Skyline.DataMiner.Net.Apps.NATSCustodian.NATSCustodianMessageHandler.InnerHandle(IConnectionInfo info, NatsCustodianForwardCredentialsRequest message)

at System.Dynamic.UpdateDelegates.UpdateAndExecute3[T0,T1,T2,TRet](CallSite site, T0 arg0, T1 arg1, T2 arg2)

at Skyline.DataMiner.Net.Apps.NATSCustodian.NATSCustodianMessageHandler.HandleMessage(OperationMeta meta, IManagerStoreCustomRequest request)

at Skyline.DataMiner.Net.ManagerStore.CustomComponent.HandleMessage(IConnectionInfo connInfo, IManagerStoreCustomRequest request)

at Skyline.DataMiner.Net.ManagerStore.BaseManager.HandleCustomMessage(IConnectionInfo connInfo, IManagerStoreCustomRequest request)

HandleCustomMessage

2022/07/06 06:16:42.089|SLNet.exe|ResetNATSClusterIfChangesAreFound|INF|0|22|Reconfiguring local Nats because: SLCloud.xml contains unreachable NatsServer(s) (5 mins) and nats-server.config is misconfigured

2022/07/06 06:17:42.131|SLNet.exe|ResetNATSClusterIfChangesAreFound|INF|0|269|Reconfiguring local Nats because: Nats Service is not running

2022/07/06 06:18:41.867|SLNet.exe|ResetNATSClusterIfChangesAreFound|INF|0|14|Reconfiguring local Nats because: SLCloud.xml is missing new NatsServer(s) and nats-server.config is misconfigured

2022/07/06 07:00:42.345|SLNet.exe|ResetNATSClusterIfChangesAreFound|INF|0|101|Reconfiguring local Nats because: SLCloud.xml contains unreachable NatsServer(s) (5 mins) and nats-server.config is misconfigured

2022/07/06 07:11:42.354|SLNet.exe|ResetNATSClusterIfChangesAreFound|INF|0|23|Reconfiguring local Nats because: SLCloud.xml is missing new NatsServer(s) and nats-server.config is misconfigured

I hid part of the IP address, but the IP in the message "Failed to connect to 100.x.x.88" is the IP of the server itself.

So my question is this... given that everything looks OK now, is there any further action that needs to be taken here to either clean up or prevent a re-occurrence? Or, after the restart of the services, has the DMA self-healed?

Thanks!

Hi Jens… there are 4 failover pairs in the cluster, but only one of them had the issue. Come to think of it, this is the same system you helped us with a few weeks ago, so maybe my initial statement about there being no previous issues was inaccurate. I had sort of blocked that issue out! 🙂 IIRC, that issue was also related to NATS, but in that case it was a memory leak. In this case there was no evidence of strain on the memory or CPU.

OH, and related to the previous issue we experienced, that was on a different agent in the cluster.

Hi Jamie,

It's very possible this was a one-time issue and will not re-occur, but it's also possible the root cause is a deeper issue (e.g. connection or network issues in the DataMiner System). When SLWatchDog detects that NATS is stopped, it automatically restarts the services in an effort to self-heal. This also creates the alarms you showed.

That being said, NATS has been a source of issues lately and a lot of bug fixing has been going on there. It's very possible this issue is already fixed in a later version.

I'd suggest keeping a close eye on these error alarms to see if it keeps happening. If it does, we will need to perform more troubleshooting to identify the exact root cause. If you see more NATS issues, please refer to Investigating_NATS_Issues.
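If it helps with keeping an eye on it, here is a minimal Python sketch that tallies the "Reconfiguring local Nats because" entries in an SLNATSCustodian log. It is not a DataMiner tool: the pipe-delimited layout is only inferred from the lines quoted in the question, the function name `reconfigure_reasons` is made up for illustration, and the log file path is something you pass in yourself.

```python
import re
import sys
from collections import Counter

# Layout inferred from the log lines quoted in this thread (an assumption):
# timestamp|process|method|level|<number>|<number>|message
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\|"
    r"(?P<process>[^|]*)\|(?P<method>[^|]*)\|(?P<level>[^|]*)\|"
    r"[^|]*\|[^|]*\|(?P<message>.*)$"
)
PREFIX = "Reconfiguring local Nats because: "


def reconfigure_reasons(lines):
    """Count how often each reconfiguration reason appears in the given lines."""
    reasons = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match and match.group("message").startswith(PREFIX):
            reasons[match.group("message")[len(PREFIX):]] += 1
    return reasons


if __name__ == "__main__":
    # Usage: python nats_reasons.py <path to a copy of the SLNATSCustodian log>
    with open(sys.argv[1], encoding="utf-8", errors="replace") as handle:
        for reason, count in reconfigure_reasons(handle).most_common():
            print(f"{count:4d}  {reason}")
```

Lines that do not match the assumed layout (stack trace frames, blank lines) are simply skipped, so a growing count for one reason over several days would be the signal to start the deeper troubleshooting.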

I hope this somewhat helps.

Best regards,

Jens

Thanks Jens! We’ll keep an eye on it. I will also look over the NATS investigation link you posted and see what I find on the DMA. In any event, it’s good to have it as a reference for future occurrences. I figured it could be a random occurrence, but thought it wise to put the question out to Dojo anyway.

How many agents are in this cluster? Does it have failover agents?