Hello Dojo,

I currently have a timer of type IP structured as follows:

<Timer id="11" options="ip:1600,3;each:1800000;pollingrate:15,3,3;threadPool:300,5,306,307,308,309,310,30000;ping:rttColumn=33,size=0,ttl=250,timeout=250,type=winsock,continueSNMPOnTimeout=false,jitterColumn=34,packetLossRateColumn=36,latencyColumn=35,amountPacketsPID=311,amountPacketsMeasurementsPID=312,excludeWorstResultsPID=313">

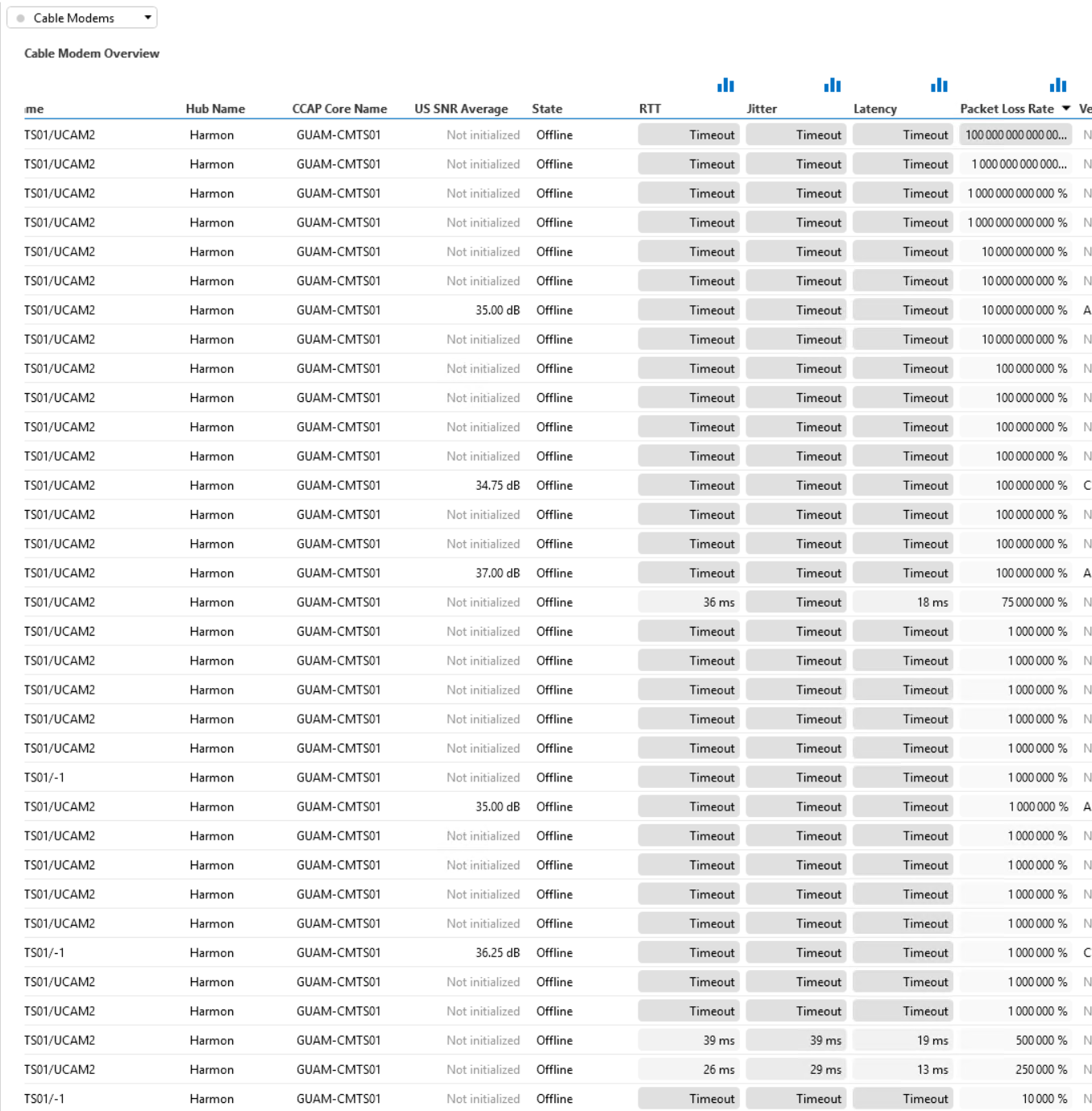

There seems to be an issue with the calculation for the packetLossRateColumn, as the screenshot shows.

Is there a way to prevent this overflow? The column already has a range of 0 - 100.

Thank you.

Hi,

Investigation of the driver shows that a sequence is defined on the packet loss rate parameter so that its value is displayed correctly.

There is a QAction that performs a protocol.GetRow call, modifies some items on the returned row object, and then performs a protocol.SetRow call with it. Because of the GetRow call, that object also contains the current value of the packet loss rate parameter, and the sequence is applied to it again when the row is set. In other words, if the packet loss rate is 100%, GetRow returns 100, SetRow is executed with 100, and the sequence changes the eventual parameter value into 10000. The value keeps being multiplied every time the QAction is executed.

Using the noset attribute or not does not seem to have any effect in this situation. The solution is to assign null to the cell position of that parameter in the row object before executing the SetRow call.
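To make the compounding effect concrete, here is a small Python simulation of the get, modify, set cycle. It does not use the DataMiner API: `SimulatedTable`, `run_qaction`, the column index `LOSS_IDX`, and the factor of 100 are all hypothetical, with the factor only inferred from the 100 to 10000 example above. It is a sketch of the arithmetic, assuming that a null cell is skipped on set, as the fix suggests.

```python
SEQUENCE_FACTOR = 100  # assumption: inferred from 100 turning into 10000
LOSS_IDX = 2           # hypothetical position of the packet loss rate cell


class SimulatedTable:
    """Toy stand-in for a table whose loss column has a sequence applied on set."""

    def __init__(self):
        self.rows = {}

    def get_row(self, key):
        # Returns a copy of the stored row, including the loss rate value.
        return list(self.rows[key])

    def set_row(self, key, row):
        stored = self.rows.setdefault(key, [None] * len(row))
        for i, value in enumerate(row):
            if value is None:
                continue  # null cell: the stored value is left untouched
            # The sequence is applied to whatever is written to the loss column.
            stored[i] = value * SEQUENCE_FACTOR if i == LOSS_IDX else value


def run_qaction(table, key, clear_loss_cell):
    """Get the row, change an unrelated cell, and write the row back."""
    row = table.get_row(key)
    row[1] = "updated"
    if clear_loss_cell:
        row[LOSS_IDX] = None  # the fix: do not write the loss rate back
    table.set_row(key, row)


if __name__ == "__main__":
    table = SimulatedTable()
    table.rows["10.0.0.1"] = ["10.0.0.1", "initial", 100]

    run_qaction(table, "10.0.0.1", clear_loss_cell=False)
    print(table.rows["10.0.0.1"][LOSS_IDX])  # 10000
    run_qaction(table, "10.0.0.1", clear_loss_cell=False)
    print(table.rows["10.0.0.1"][LOSS_IDX])  # 1000000

    table.rows["10.0.0.1"][LOSS_IDX] = 100
    run_qaction(table, "10.0.0.1", clear_loss_cell=True)
    print(table.rows["10.0.0.1"][LOSS_IDX])  # 100
```

In the actual QAction, the equivalent change would be to set the packet loss rate position of the row object to null between the GetRow and SetRow calls.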