Hi Dojo,

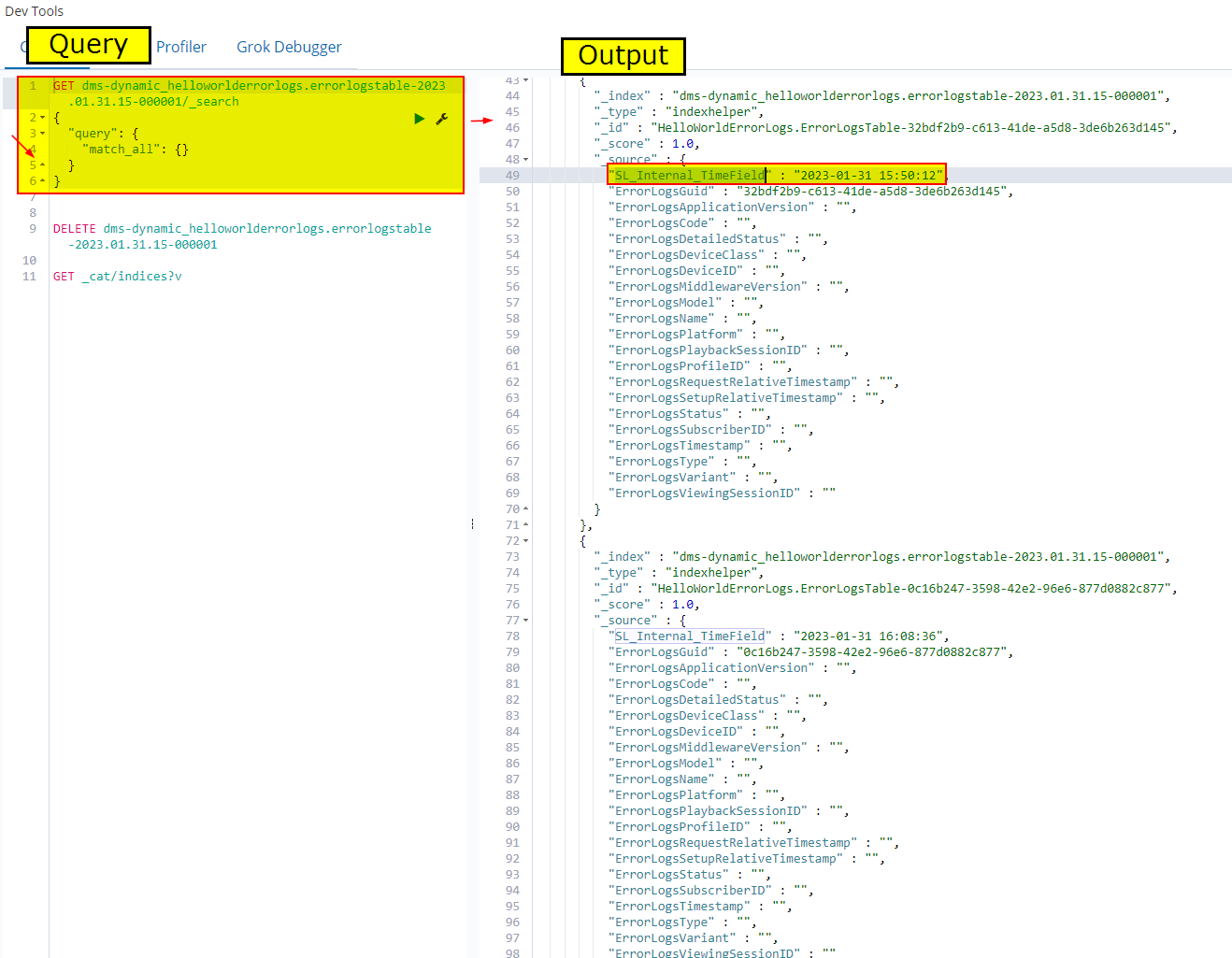

I am investigating the possibilities of DataMiner for offloading certain error records to an ElasticSearch table. By adding rows to the table below, I was able to add records to an ElasticSearch index containing the name I chose myself, 'HelloWorldErrorLogs' (in a certain format, for instance dms-dynamic_helloworlderrorlogs.errorlogstable-2023.01.31.15-000001).

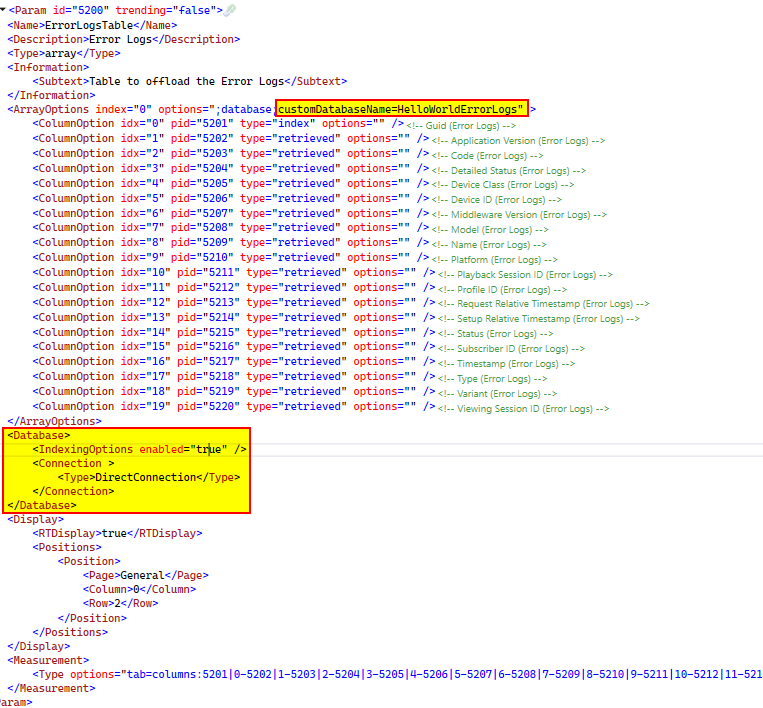

The table is implemented like this:

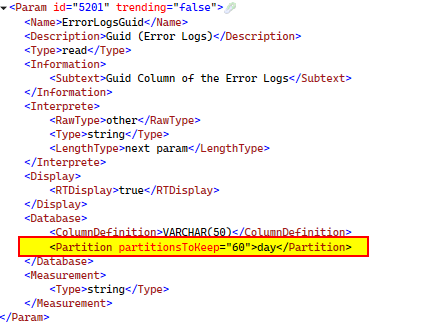

The first column is the index and is actually a Guid. To this column I added another node, the Partition node, to try to specify that records should only live 60 days in the ElasticSearch database. This was based on the documentation about partitioning and implementing logger tables.

However, when I look at the stored documents (the ElasticSearch term for records) via Kibana, I do not see a difference from the documents that were offloaded to the same table before that setting was configured.

Is it possible that the TTL setting only applies to DataMiner and the logger table itself, and has no influence on how long a record lives in the ElasticSearch database?

Is there a way to configure the TTL from DataMiner? Or is the only way to do this to run a recurring query on the ElasticSearch database, outside of DataMiner, that deletes documents of a certain index older than a certain age (for instance, where the SL_Internal_TimeField is more than 60 days ago)?

Kind regards,

Joachim

Thank you for the relevant extra information, Srikanth.

Hey Joachim,

TTL for logger tables in Elastic does not operate on the SL_Internal_Timefield. Elastic does not have a way to define TTL at the record level, so to facilitate this we use the index.

Notice how the index is formatted as <Name>-<Date>-<seqID>. Depending on the partition you specified, a new index will be created at a fixed interval. In your case, every day. This means that each of these indices will only contain a part of the data. These are all grouped under one big alias with the same <Name>.logstable, so you can search over your entire dataset. For your example, you may notice the following on your Elastic server:

- Alias: HelloWorldErrorLogs.LogsTable

- Index: HelloWorldErrorLogs.LogsTable-2023.01.31.15-000001

- Index: HelloWorldErrorLogs.LogsTable-2023.02.01.15-000001

- Index: HelloWorldErrorLogs.LogsTable-2023.02.02.15-000001

- ….
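To make the <Name>-<Date>-<seqID> layout concrete, here is a minimal Python sketch that splits such an index name into its three parts. The regular expression and the helper name `parse_index_name` are my own assumptions, derived only from the index names shown in this thread (hourly date format `yyyy.MM.dd.HH`); it is not DataMiner code.

```python
import re
from datetime import datetime
from typing import NamedTuple


class IndexName(NamedTuple):
    name: str         # the <Name> part, e.g. "HelloWorldErrorLogs.LogsTable"
    bucket: datetime  # the <Date> part, parsed as year.month.day.hour
    sequence: int     # the <seqID> part


# Assumed pattern, based only on the names in this thread:
# <Name>-<yyyy.MM.dd.HH>-<seqID>
_INDEX_RE = re.compile(
    r"^(?P<name>.+)-(?P<date>\d{4}\.\d{2}\.\d{2}\.\d{2})-(?P<seq>\d+)$"
)


def parse_index_name(index: str) -> IndexName:
    """Split an index name into name, date bucket and sequence number."""
    match = _INDEX_RE.match(index)
    if match is None:
        raise ValueError(f"unexpected index name format: {index!r}")
    bucket = datetime.strptime(match["date"], "%Y.%m.%d.%H")
    return IndexName(match["name"], bucket, int(match["seq"]))


parsed = parse_index_name("HelloWorldErrorLogs.LogsTable-2023.01.31.15-000001")
print(parsed.name, parsed.bucket.isoformat(), parsed.sequence)
```

The date part is what the TTL check looks at, so it is parsed into a `datetime` rather than kept as a string.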

Every so often (every 5 minutes), the server will check these indices. During this check, we compare the <Date> portion of the index name against the TTL you specified (60 days in your example). If the index only contains outdated data, it will be deleted.

As an example, consider a table with <Partition partitionsToKeep="3">Hours</Partition>

After a while we will see the following in elastic

- Alias: example.LogsTable

- Index: Example.Logstable-2023.01.31.15-000001

- Index: Example.Logstable-2023.01.31.16-000001

- Index: Example.Logstable-2023.01.31.17-000001

- Index: Example.Logstable-2023.01.31.18-000001

- Index: Example.Logstable-2023.01.31.19-000001

At 2023.01.31 19:34 the check runs and draws the following conclusions about the data:

- Index 2023.01.31.15 -> 2023.01.31.19 – 3h > 2023.01.31.15 -> completely stale -> delete

- Index 2023.01.31.16 -> 2023.01.31.19 – 3h == 2023.01.31.16 -> mix of stale and relevant data (records after 16:34) -> keep

- Index 2023.01.31.17-19 -> 2023.01.31.19 – 3h < 2023.01.31.17 -> relevant data -> keep
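The comparison in this example can be sketched in a few lines of Python. This is only an illustration of the reasoning above for hourly partitions, not the actual DataMiner implementation; the function name `classify_hourly_index` and the returned labels are made up for this sketch.

```python
from datetime import datetime, timedelta


def classify_hourly_index(index_hour: datetime, now: datetime,
                          partitions_to_keep: int) -> str:
    """Decide what happens to an hourly index at check time `now`.

    The check time is truncated to the hour, the TTL (in hours) is
    subtracted, and the result is compared with the hour in the index name.
    """
    cutoff_hour = (now.replace(minute=0, second=0, microsecond=0)
                   - timedelta(hours=partitions_to_keep))
    if index_hour < cutoff_hour:
        return "delete"        # index only holds stale data
    if index_hour == cutoff_hour:
        return "keep (mixed)"  # stale and relevant data share this index
    return "keep"              # index only holds relevant data


# The situation from the example: check at 19:34 with partitionsToKeep="3".
check_time = datetime(2023, 1, 31, 19, 34)
for hour in range(15, 20):
    index_hour = datetime(2023, 1, 31, hour)
    verdict = classify_hourly_index(index_hour, check_time, 3)
    print(index_hour.strftime("%Y.%m.%d.%H"), "->", verdict)
```

Because the decision is made per index, an index in the "mixed" state is kept as a whole, including its stale records.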

This does have a couple of side effects, however. Since we only delete entire indices at a time, outdated data can remain present until the index that contains it has been deleted.

Depending on your implementation there may also be some limitations regarding the usage of TTL (see: Notes on elasticSearch Loggertables)

Thank you for the elaborate answer, Brent. Using an example always helps a lot.

Joachim

The following might help you; I have come across this in the past.

https://docs.dataminer.services/user-guide/Reference/Skyline_DataMiner_Folder/More_information_on_certain_files_and_folders/DBMaintenance_xml_and_DBMaintenanceDMS_xml.html#dbmaintenancexml-and-dbmaintenancedmsxml

"Indexing: TTL period that will override the default TTL setting in case the table in question is part of an indexing database.

From DataMiner 10.0.3 onwards, it is possible to specify TTL settings for custom data stored in the indexing database. For example, for jobs this can be configured as follows:"

6M

Thanks.