Hello,

I learned that the column type autoincrement is not compatible with Elastic DB.

Does that mean we can never use it or only in specific cases?

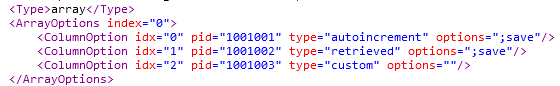

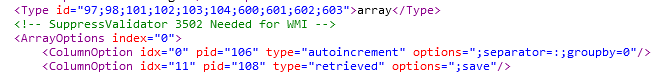

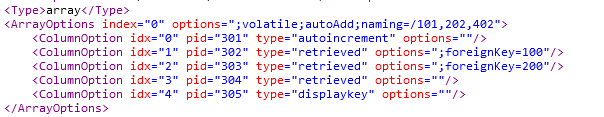

Here are already 3 examples where the autoincrement type is used today.

- add rows via QAction:

string rowKey = protocol.AddRowReturnKey(tablePid); - add key on rows added via polling: e.g. WMI

- Service Overview Manager receiving alarm data via the "autoAdd" option:

If we can no longer use this, how can we achieve the same result for all the above cases?

For case 1: modify existing driver, new version range is not needed. Change the ColumnOption type from autoincrement to retrieved.

Simply add a single, saved, parameter that stores the highest PK value of the table.

-If the single parameter is empty then read out the table to determine the highest PK value and start from there and save the new PK in the single parameter (this covers existing elements with tables that had autoincrement, if table is empty then start with PK "1" -> this covers new created elements).

-If the single parameter is not empty, then the new PK = single parameter value + 1, and save the single parameter with the new PK value.

For case 2: this depends: for some cases a QAction could fill in the column, in other cases this column is the index (PK) column. In the latter case it's better to choose another PK column (such as the instance in case of SNMP polling) -> in that case a new version range will be needed to make it compatible with elastic.

For the WMI case: I'm wondering if this auto increment is still needed? This is coming from the Microsoft Platform driver and I can't see a case where :1 or higher is present, Windows adds # number to make it already unique, resulting in :0 for all cases. As the autoincrement column is the index PK column, there is no other option but to have a new version range to get rid of it. Alternative to avoid the range change is to make the original table fully retrieved, and poll the WMI data in a hidden table in the background, then copy the data to the original table, and PK value is then fixed ending with ":0". This way the range change is avoided, it will be compatible with Elastic, but it will cost some performance.

For case 3: I have no experience with that driver how the data is entering in that table.

Indeed for case 2 we can’t update the PK, I already edited my answer but you were probably were still seeing my original comment.

The reason why this feature is not compatible: as far as I heard the “rumors” it comes down to this: autoincrement is something that is supported in MySQL, Cassandra doesn’t supported it. For this a custom autoincrement functionality has been developed by software so it can run on Cassandra. However, Elastic is running in a clustered environment and there it’s difficult because everything is distributed (who takes care of assigning a unique number as there are multiple nodes?). Clustered Cassandra will also have the same problem. So we’ve been advised to avoid using autoincrement, if the Cassandra system ever changes from one node to multiple to still have a working driver. Bottom line: having multiple nodes makes it difficult to assign something unique, locking would be needed which would completely slow down the database system (like mentioned, these are ‘rumors’, up to software department to confirm or not what the real reason is) For Elastic I can understand it, as this is working with logger tables and the content is in the DB instead of inside DataMiner, but for normal tables I don’t really understand the reasoning why not to use autoincrement as SLProtocol knows the entire table and that process could determine a new unique PK value (just like we’re mimicking it with a QAction).

Hello,

I’m not familiar with Driver development, so feel free to correct me on any faulty assumptions.

None of the cases which you’ve listed, has the database option, so none of these tables are loggertables that are written in the database/elastic? If this is true, none of these cases will be affected?

However, you are right when you say that for Cassandra Cluster & Elastic, the autoincrement feature for loggertables will most likely have to be revised. It’s indeed quite difficult to have auto increment in a clustered system. But using “auto increment” basically means adding a column to your row that will always be unique but will have no significant value to your record. I believe when reading out your record, you are not intrested in the primary key. You could use the primary key to have a sense of order, which record was inserted first. But this could be avoided with having a “DateTime” column in your record.

I believe that instead of “auto increment”, the driver could generate a GUID for primary key, or this could be a software feature? We’ve done this for netflow (direct connection only), in which the ID of the record is generated by Elastic, guaranteeing it to be unique.

This has further been investigated. Please find the conclusions below:

-Autoincrement is not deprecated when being used in a normal table (=not a logger table).

-The usage of an autoincrement in a logger table is deprecated. It still works in MySQL and in Cassandra (single node), but is not supported in Elastic. If Cassandra cluster is ever used in the background then it will also be difficult. Hence the statement that it’s deprecated. An autoincrement in a logger table is only needed to have a unique PK (because DataMiner needs a unique PK), if a PK can be omitted for logger tables, similar like elastic doesn’t need a PK column then we don’t even need to determine a unique value anymore.

To answer to the original 3 examples of the question: if you want to use these tables in an elastic database then autoincrement can’t be used. However these tables are not part of elastic. The WMI table is a table that is being used for polling, not like a logger table.

Laurens,

Thank you for your feedback.

For case 2:

how can we update the value in a QAction when it is used as Primary key?

Would this mean we need to poll the WMI data in a hidden table and duplicate the info in a displayed table completely filled in via QAction?

Would you also know the reason why this feature is not compatible?