Hi, a user is interested in storing real-time trending data for up to 3 years. The user is currently running a 4-DMA cluster on DMA 10.1.x.

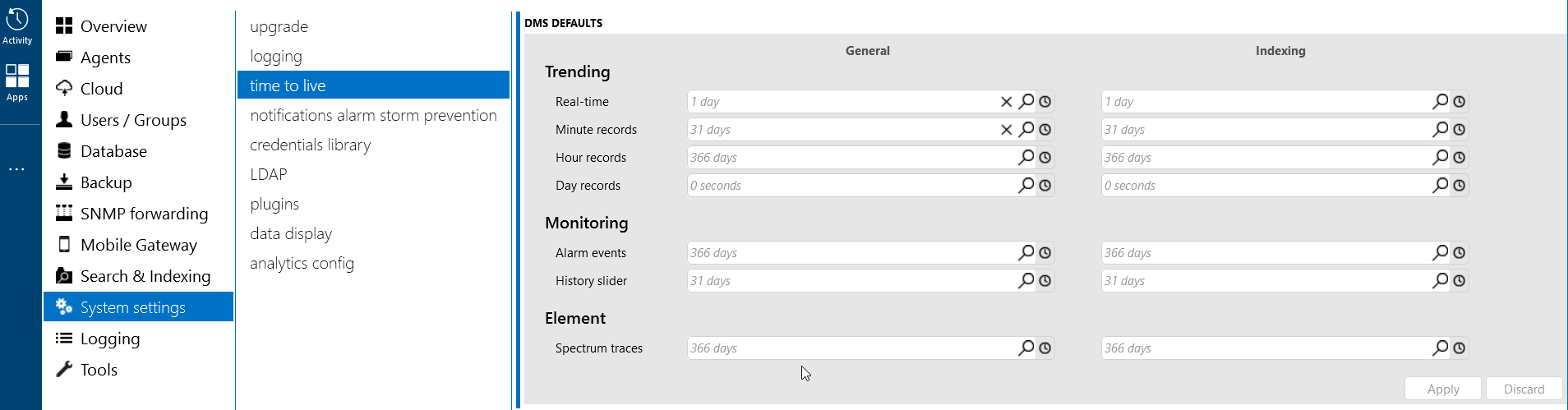

- A DataMiner training video on trending mentions that real-time trending data should only be stored for a couple of days (not weeks, months or years) because of the large amount of data that can be produced. This is in line with the default TTL value of 1 day.

- However, a maximum TTL value of 1098 days (approx. 3 years) is allowed for real-time trending data. Given the previous point, are there any caveats to be aware of when maxing out this value?

Is there any documented advice or best practice that a user intending to set the real-time trending TTL to the maximum value of 1098 days should take into consideration (e.g. regarding DMA and DB performance and storage)?

Hi Bing,

If you want to activate real-time trending for 3 years, you will need to calculate the impact on the database if you don't want any surprises a year or two from now...

You need to know the number of parameters that will be activated for this type of trending and how often those parameters are updated. Most importantly, you need to know how often they actually change in value, because only a change in the value of a parameter goes to the database. If the value remains the same over several poll cycles, it doesn't go to the database.

With these numbers you should be able to calculate the number of datapoints you will have after 3 years (Excel might help here). Multiply this by the average size of a trend datapoint in Cassandra, which is 200 bytes, then multiply again by the replication factor you want to configure in Cassandra, and this should result in the total size of the data you will have.
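As a rough illustration of that calculation, here is a minimal Python sketch. The 200 bytes per datapoint comes from the figure above; the function name and the example inputs (10,000 trended parameters, one value change per minute, replication factor 3) are hypothetical and only there to show the arithmetic, so substitute the numbers from your own system.

```python
def estimate_trend_storage_bytes(
    num_parameters: int,
    changes_per_param_per_day: int,
    retention_days: int,
    bytes_per_point: int = 200,   # average trend datapoint size quoted above
    replication_factor: int = 1,
) -> int:
    """Estimate the total Cassandra data size for real-time trending.

    Only actual value changes are counted, because unchanged values
    are not written to the database.
    """
    points = num_parameters * changes_per_param_per_day * retention_days
    return points * bytes_per_point * replication_factor


# Hypothetical example: 10,000 parameters, each changing once per minute
# (1440 changes per day), kept for the maximum TTL of 1098 days, RF = 3.
total = estimate_trend_storage_bytes(10_000, 1440, 1098, replication_factor=3)
print(f"{total / 1e12:.2f} TB")  # prints "9.49 TB"
```

Even with these modest assumed numbers the result is several terabytes, which is why the change rate per parameter is the number worth measuring carefully.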

To allow compaction, the size of the SSDs should be double this, and it's recommended to have a maximum of 1 TB of SSD for each Cassandra node (resulting in 500 GB of data per node).
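To turn that rule of thumb into a node count, something like the following sketch could be used. The 1 TB SSD limit and the factor of two for compaction come from the recommendation above; the function name and the 9.5 TB input are hypothetical, and the result is only a lower bound based on storage (it says nothing about CPU, memory or read/write load).

```python
def cassandra_nodes_needed(
    total_data_bytes: int,
    ssd_bytes_per_node: int = 1_000_000_000_000,  # 1 TB SSD per node
    compaction_headroom: int = 2,                 # keep half the disk free
) -> int:
    """Return the minimum number of nodes needed to hold the data.

    total_data_bytes is the full data size, replication included.
    """
    usable_per_node = ssd_bytes_per_node // compaction_headroom  # 500 GB
    # Integer ceiling division: round up to whole nodes.
    return -(-total_data_bytes // usable_per_node)


# Hypothetical example: about 9.5 TB of replicated trend data.
print(cassandra_nodes_needed(9_500_000_000_000))  # prints 19
```

If the resulting node count looks unrealistic for the setup, that is a sign to reduce the number of trended parameters or the TTL rather than to put more data on each node.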

This way you should be able to calculate the impact on the database and how to scale it properly. In this setup, making this calculation seems like a must.

If you need any help, let me know.

Bert

Bert, I was reading this post and noticed your statement regarding SSD size and compaction. Can you expand on this, please, or provide a link to something that explains it in detail? We will be migrating all our customers to Cassandra later this year, and this sounds like a requirement that will need to be investigated to ensure we do not run into issues once migrated to Cassandra.

Thanks

Hi Bert, thanks for the additional detail on how to roughly estimate DB sizing. We’ll reach out if we need further help on this.