Hello Dojo,

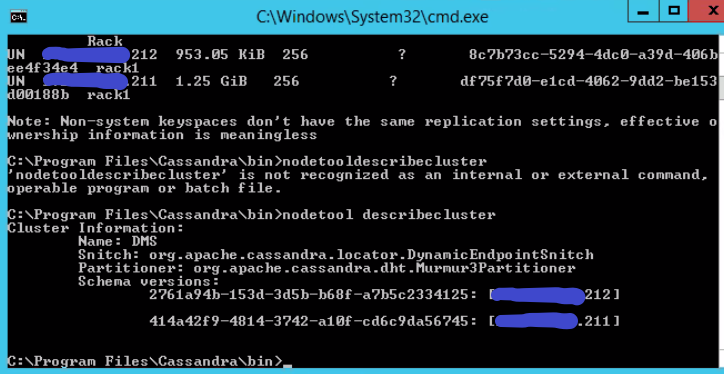

We had an issue where a failover agent suffered a complete hardware failure and needed to be reinstalled on a new machine. The backup agent was reinstalled on new hardware, but there was no backup to restore, so we did a fresh 10.2 installation and upgraded it to 10.2 CU11. After we joined this new agent to failover, it was reported that elements were no longer working, and we noticed a schema mismatch: the previous schema had been lost and a new one had been created.

I have attempted to resolve this mismatch by running `nodetool drain` followed by rolling restarts of both nodes. When that did not work, we broke failover from the Failover status window in the primary agent's Cube, reinstalled the backup agent again, set the primary node back to localhost and, from the primary node, ran `nodetool removenode` for the backup node, since it still appeared in `nodetool status` after failover had been broken.
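For reference, `nodetool removenode` takes the Host ID of the dead node as printed by `nodetool status`, not its IP address. The following is a minimal Python sketch, assuming the `nodetool status` output has been captured as text in its usual column layout (the sample in the demo is made up). It lists the nodes and builds, without running, a removenode command for each node reported as down:

```python
import re
from typing import List, NamedTuple

# A Cassandra Host ID is a UUID; match it anywhere on the row.
UUID_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}", re.IGNORECASE
)
# Node rows start with U/D (Up/Down) followed by N/L/J/M (Normal/Leaving/Joining/Moving).
ROW_RE = re.compile(r"^([UD][NLJM])\s+(\S+)")


class NodeRow(NamedTuple):
    state: str    # e.g. "UN" (Up/Normal) or "DN" (Down/Normal)
    address: str  # node address as printed by nodetool
    host_id: str  # the ID that `nodetool removenode` expects


def parse_nodetool_status(text: str) -> List[NodeRow]:
    """Extract node rows from captured `nodetool status` output."""
    rows = []
    for line in text.splitlines():
        m = ROW_RE.match(line.strip())
        host = UUID_RE.search(line)
        if m and host:
            rows.append(NodeRow(m.group(1), m.group(2), host.group(0).lower()))
    return rows


def removenode_commands(text: str) -> List[List[str]]:
    """Build (but do not run) removenode commands for nodes reported down."""
    return [
        ["nodetool", "removenode", row.host_id]
        for row in parse_nodetool_status(text)
        if row.state.startswith("D")
    ]


if __name__ == "__main__":
    # Made-up sample in the usual `nodetool status` layout.
    sample = """\
Datacenter: datacenter1
=======================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address   Load     Tokens  Owns (effective)  Host ID                               Rack
UN  10.0.0.1  1.2 MiB  256     100.0%            11111111-2222-3333-4444-555555555555  rack1
DN  10.0.0.2  1.1 MiB  256     100.0%            66666666-7777-8888-9999-000000000000  rack1
"""
    for cmd in removenode_commands(sample):
        print(" ".join(cmd))
```

Matching the Host ID by pattern rather than by column position avoids depending on the Load column, which contains a space between the value and its unit.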

After rejoining the backup agent to failover, we have the same issue again. The schema conflict seems to reside somewhere on the primary node, but I am not sure where it is stored or how to resolve it from here.
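One way to see which nodes disagree, rather than inferring it from failing elements, is `nodetool describecluster`: its "Schema versions" section lists each schema version UUID with the hosts that report it, and a healthy cluster shows a single version. The following is a minimal Python sketch, assuming that output has been captured as text (the sample in the demo is made up, and the exact formatting may vary slightly between Cassandra versions):

```python
import re
from typing import Dict, List

# Lines under "Schema versions:" look like "<uuid>: [ip1, ip2]";
# nodes that could not be contacted are grouped under "UNREACHABLE".
VERSION_RE = re.compile(r"^\s*([0-9a-fA-F-]{36}|UNREACHABLE):\s*\[(.*)\]\s*$")


def parse_schema_versions(text: str) -> Dict[str, List[str]]:
    """Map each schema version (or UNREACHABLE) to the hosts reporting it."""
    versions: Dict[str, List[str]] = {}
    for line in text.splitlines():
        m = VERSION_RE.match(line)
        if m:
            hosts = [h.strip() for h in m.group(2).split(",") if h.strip()]
            versions[m.group(1)] = hosts
    return versions


def has_schema_disagreement(text: str) -> bool:
    """True when reachable nodes report more than one schema version."""
    versions = parse_schema_versions(text)
    return len([v for v in versions if v != "UNREACHABLE"]) > 1


if __name__ == "__main__":
    # Made-up sample: each node reports a different schema version.
    sample = """\
Cluster Information:
\tName: DMS Cluster
\tSnitch: org.apache.cassandra.locator.SimpleSnitch
\tPartitioner: org.apache.cassandra.dht.Murmur3Partitioner
\tSchema versions:
\t\t59adb24e-f3cd-3e02-97f0-5b395827453f: [10.0.0.1]

\t\te84b6a60-24cf-30ca-9b58-452d92911703: [10.0.0.2]
"""
    for version, hosts in parse_schema_versions(sample).items():
        print(version, hosts)
    print("disagreement:", has_schema_disagreement(sample))
```

Running the same check on each node would at least show whether the nodes see each other and which version each one holds.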

Thank you in advance for any insight and info!

Hey Ryan,

As a last resort, I would wipe both Cassandra nodes, start fresh, and restore your SLDMADB table files on the primary. After that, you can attempt to configure failover again.

- Ensure failover is completely broken

- Stop all DMAs and Cassandra nodes

- Uninstall the Cassandra nodes (`sc delete`) and remove all their files/folders

- Reinstall Cassandra on both DMAs

- Connect DMAs to their respective node

- Start DMAs and ensure they function as standalone DMAs

- Stop DMA and Cassandra on primary

- Restore SLDMADB table files on primary

- Start primary and ensure functionality

- Reconfigure failover
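The Cassandra part of the uninstall step could be scripted per DMA. Below is a minimal dry-run sketch in Python that only builds the Windows commands and runs nothing. The service name `cassandra` and the folder `C:\Program Files\Cassandra` are hypothetical placeholders, so check the actual service name (for example in services.msc) and install path on each DMA first:

```python
from typing import List

# Hypothetical placeholders: verify the real service name and install
# folder of the Cassandra node on each DMA before using anything like this.
CASSANDRA_SERVICE = "cassandra"
CASSANDRA_DIR = r"C:\Program Files\Cassandra"


def wipe_node_commands(service: str = CASSANDRA_SERVICE,
                       install_dir: str = CASSANDRA_DIR) -> List[List[str]]:
    """Return (not run) the Windows commands for the 'uninstall' step."""
    return [
        # Send a stop request to the service.
        ["sc", "stop", service],
        # `sc stop` can return while the service is still STOP_PENDING;
        # check that this reports STOPPED before continuing.
        ["sc", "query", service],
        # Remove the service registration.
        ["sc", "delete", service],
        # Delete the node's files and folders (irreversible).
        ["cmd", "/c", "rmdir", "/s", "/q", install_dir],
    ]


if __name__ == "__main__":
    for cmd in wipe_node_commands():
        print(cmd)
```

Keeping it as a dry run makes it easy to review the exact commands for both DMAs before anything destructive is executed.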

We weren’t sure whether there was an easier way, such as just deleting/appending a schema table, instead of a full deletion, reinstall, and restore of the data tables (a huge effort).