When using the "Generic Kafka Producer", what is possible with the driver as-is?

Is any customization script required, or can the driver publish directly to topics on an associated broker?

I can see the driver help also mentions the SNMP Manager. Will this use the same OIDs as when we relay information directly via SNMP forwarding? What's the difference?

>> Copied from the driver help:

SNMP MANAGER CONFIGURATION

DataMiner receives the alarm information in the incoming SNMP inform messages/traps. Messages might be forwarded by any DataMiner Agent or by third-party software. If a DataMiner Agent forwards them, the SNMP Manager (Apps > System Center > SNMP Forwarding) settings should be configured as follows:

-

SNMP version: SNMPv2 or SNMPv3.

-

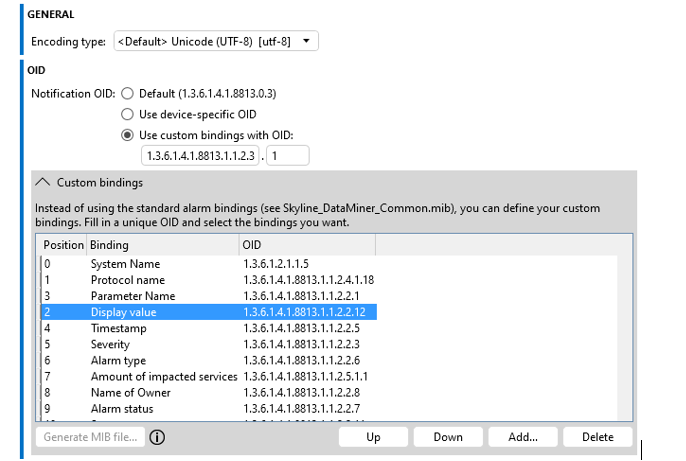

Notification OID: Needs to match the Custom Bindings Object ID displayed when you click the More Configurations page button on the Alarms page. Otherwise, received inform messages/traps will not be processed.

-

The custom bindings table should be filled in.
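The Notification OID requirement in the help text can be illustrated with a small sketch. In SNMPv2c/v3 traps and informs, the notification's identity is carried in the `snmpTrapOID.0` varbind (`1.3.6.1.6.3.1.1.4.1.0`, from SNMPv2-MIB). The sketch assumes the receiving element compares that value with its configured Custom Bindings Object ID and ignores anything else; the function name and the example OIDs (under the documentation-reserved enterprise number 32473) are hypothetical and not the connector's actual code.

```python
# Hypothetical sketch: how a receiver might decide whether to process an inform/trap.
from typing import Optional

# snmpTrapOID.0 from SNMPv2-MIB: carries the notification OID in v2c/v3 traps and informs.
SNMP_TRAP_OID = "1.3.6.1.6.3.1.1.4.1.0"


def accept_notification(varbinds: dict[str, str], expected_oid: str) -> Optional[dict[str, str]]:
    """Return the remaining varbinds if the notification OID matches, else None."""
    if varbinds.get(SNMP_TRAP_OID) != expected_oid:
        # Mismatch: per the help text, the inform/trap would not be processed.
        return None
    # Drop the snmpTrapOID.0 entry; the other varbinds are returned unchanged.
    return {oid: value for oid, value in varbinds.items() if oid != SNMP_TRAP_OID}


if __name__ == "__main__":
    # Placeholder OIDs under the documentation-reserved enterprise number 32473.
    configured = "1.3.6.1.4.1.32473.1.0.1"
    inform = {SNMP_TRAP_OID: configured, "1.3.6.1.4.1.32473.1.1": "Critical"}
    print(accept_notification(inform, configured))
    print(accept_notification(inform, "1.3.6.1.4.1.32473.1.0.2"))
```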

Hi Alberto,

With the Generic Kafka Producer, DataMiner acts as a producer and can publish information about alarms and parameter values (standalone parameters and/or table parameters) to a specific topic.

Once the Kafka cluster has been set up and configured, there is nothing else you need to do besides deploying the connector.
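For a POC, one way to check what the connector publishes is a small consumer on the target topic. The sketch below assumes the `confluent-kafka` Python client; the broker address, topic name and group ID are placeholders, and the only assumption about the payload is that message values are JSON objects, as described in this thread.

```python
# Minimal POC consumer sketch; broker, topic and group ID are placeholders.
import json


def decode_message(value: bytes) -> dict:
    """Decode one message value, assumed to be a UTF-8 JSON object of key/value pairs."""
    payload = json.loads(value.decode("utf-8"))
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    return payload


def consume(bootstrap: str = "localhost:9092", topic: str = "dataminer-alarms") -> None:
    # Imported here so decode_message can be used without the client installed.
    from confluent_kafka import Consumer

    consumer = Consumer({
        "bootstrap.servers": bootstrap,
        "group.id": "dataminer-poc",      # placeholder consumer group
        "auto.offset.reset": "earliest",  # read from the start on first run
    })
    consumer.subscribe([topic])
    try:
        while True:
            msg = consumer.poll(1.0)  # wait up to 1 s for a message
            if msg is None:
                continue
            if msg.error():
                print("Consumer error:", msg.error())
                continue
            value = msg.value()
            if value is None:
                continue
            print(msg.key(), decode_message(value))
    finally:
        consumer.close()
```

Run it with, for example, `consume("broker1:9092", "<your topic>")`, replacing both arguments with the values configured on the connector.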

Regarding the SNMP Manager configuration, standard SNMP forwarding functionality is used to filter the alarms and define which information is forwarded. On each DataMiner Agent, you need to create at least one SNMP manager. The SNMP manager then sends the information as SNMP inform messages, via the loopback interface, to the Kafka Producer element.

In the SNMP Manager, you can:

- Define which alarm information should be forwarded. To achieve this, custom binding OIDs are used.

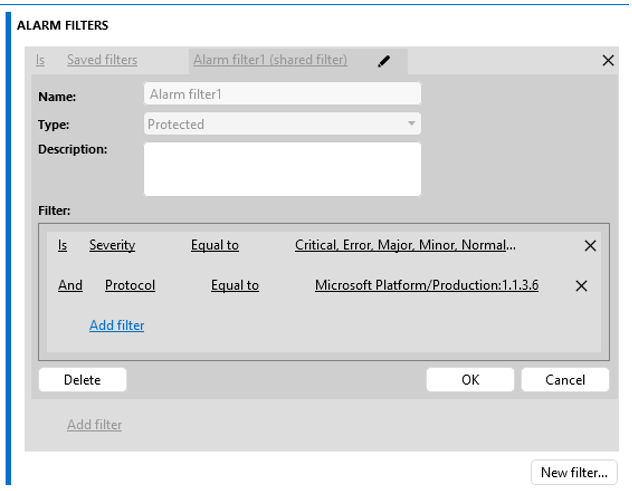

- Define which alarms should be forwarded. To achieve this, alarm filters can be defined.

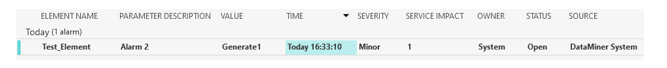

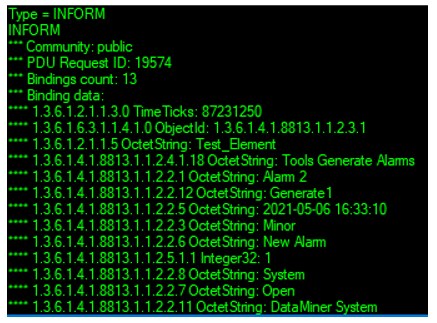

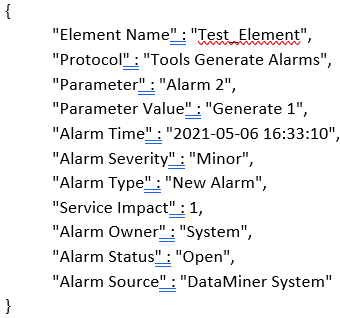
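Conceptually, each SNMP manager applies two steps per alarm: the alarm filter decides whether the alarm is forwarded, and the custom bindings decide which alarm fields become varbinds. Both are configured in DataMiner itself, not in code; the sketch below only models that behavior, and the alarm field names, the filter and the binding OIDs are hypothetical placeholders.

```python
# Conceptual model of one SNMP manager; not DataMiner code.
from typing import Callable, Optional

Alarm = dict[str, str]

# Hypothetical custom bindings: OID -> alarm field sent in that varbind.
CUSTOM_BINDINGS = {
    "1.3.6.1.4.1.32473.1.1": "ElementName",
    "1.3.6.1.4.1.32473.1.2": "ParameterName",
    "1.3.6.1.4.1.32473.1.3": "Severity",
}


def critical_only(alarm: Alarm) -> bool:
    """Hypothetical alarm filter: forward only critical alarms."""
    return alarm.get("Severity") == "Critical"


def to_varbinds(alarm: Alarm,
                alarm_filter: Callable[[Alarm], bool],
                bindings: dict[str, str]) -> Optional[dict[str, str]]:
    """Return OID -> value pairs for a forwarded alarm, or None if it is filtered out."""
    if not alarm_filter(alarm):
        return None
    # Fields missing from the alarm are sent as empty strings.
    return {oid: alarm.get(field, "") for oid, field in bindings.items()}
```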

Each alarm is processed, and its information is sent in JSON format using key/value pair-based messaging. For example, the alarm:

Will be sent as:

and it will be changed to:

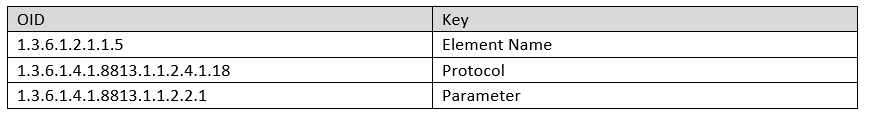

On the configuration page, the alarms table is where you define each OID and the key that will replace it, like:
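The conversion on the Kafka Producer side can be sketched as follows: each received OID is looked up in the alarms table and replaced by its configured key, and the result is serialized as a JSON object. The table contents and key names below are hypothetical, and how the connector treats OIDs that are not in the table is not stated in this thread, so the sketch simply keeps them under their OID.

```python
# Sketch of the OID -> key replacement; table contents are hypothetical.
import json

# Hypothetical alarms table from the configuration page: OID -> key.
ALARMS_TABLE = {
    "1.3.6.1.4.1.32473.1.1": "element",
    "1.3.6.1.4.1.32473.1.2": "parameter",
    "1.3.6.1.4.1.32473.1.3": "severity",
}


def to_kafka_json(varbinds: dict[str, str], table: dict[str, str]) -> str:
    """Replace each OID with its configured key and serialize as a JSON object."""
    # Unmapped OIDs are kept as-is (an assumption, not documented connector behavior).
    message = {table.get(oid, oid): value for oid, value in varbinds.items()}
    return json.dumps(message)


if __name__ == "__main__":
    received = {
        "1.3.6.1.4.1.32473.1.1": "Encoder 1",
        "1.3.6.1.4.1.32473.1.3": "Critical",
    }
    print(to_kafka_json(received, ALARMS_TABLE))
```

Keeping the mapping in a table rather than in code matches the thread's point that no custom script is needed: changing a key only means editing a row on the configuration page.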

Please let me know if I can further assist you.

Regards,

Thank you so much for this thorough description, Tiago, much appreciated.

I was afraid that the connector for the Producer would require some additional code to be written in order to work, but now that I have this step-by-step guide, I’ll check if we can set up a POC.

Marking this as solved – thanks again!