Hi, a user has the following use case, which is currently supported by their existing fault management system. They are transitioning to DataMiner and phasing out that system, and would like to know whether the same behavior can be implemented:

Use case to support:

(1) An alarm is raised at a particular severity level that is not 'Critical' (e.g.: Warning/Minor/Major).

(2) If the alarm is not acknowledged within a configurable period (e.g.: 60s, 1800s, 3600s), its severity is automatically increased without additional input from the device (the data value that triggered the initial alarm does not change). In other words, DataMiner would need to keep track of the elapsed time and of whether the alarm has been acknowledged, and increase the severity if both conditions are met.
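
To make the intended behavior concrete, here is a minimal Python sketch of the escalation logic. It only illustrates the use case and is not DataMiner code: the `Alarm` class, the `escalated_severity` function and the "one step up per timeout" rule are assumptions made for the example.

```python
from dataclasses import dataclass

# Severity ladder, using the levels named in the use case.
SEVERITIES = ["Warning", "Minor", "Major", "Critical"]


@dataclass
class Alarm:
    severity: str
    raised_at: float          # time the alarm was raised, in seconds
    acknowledged: bool = False


def escalated_severity(alarm: Alarm, now: float, timeout_s: float) -> str:
    """Return the severity the alarm should have at time `now`.

    The device value is never consulted: only elapsed time and the
    acknowledged flag decide whether the severity goes up.
    """
    if alarm.acknowledged or alarm.severity == "Critical":
        return alarm.severity
    if now - alarm.raised_at < timeout_s:
        return alarm.severity
    # Assumption: escalate by exactly one level.
    return SEVERITIES[SEVERITIES.index(alarm.severity) + 1]
```

For example, a 'Minor' alarm raised at t=0 with a 60s timeout stays 'Minor' at t=59 and becomes 'Major' at t=60, unless it was acknowledged in the meantime.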

Hysteresis comes to mind as a possible solution. However, the problem that hysteresis solves is fundamentally different: its purpose is to prevent an alarm from being registered or escalated immediately when the data value that triggers the alarm changes (i.e.: it prevents alarm flapping). It is important to note that hysteresis depends on a change in the data value polled/reported from the device, while in the use case above there is no such change.

Any advice on how to handle the use case we are trying to solve is welcome.

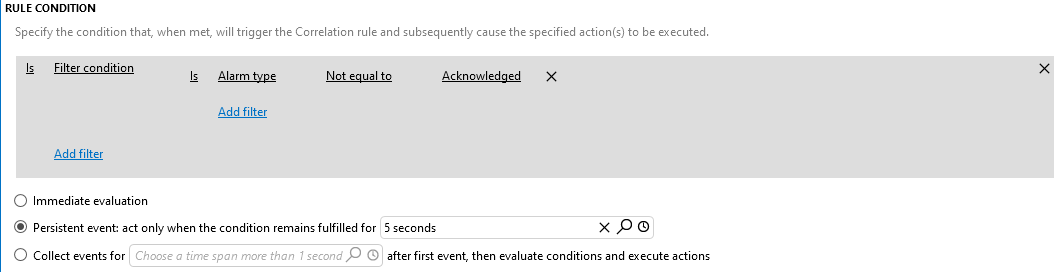

This can be done using the Correlation module. You can create a correlation rule with the rule condition 'alarm type not equal to Acknowledged' and set the rule to trigger only if the condition is met for more than a specified time, as shown below:

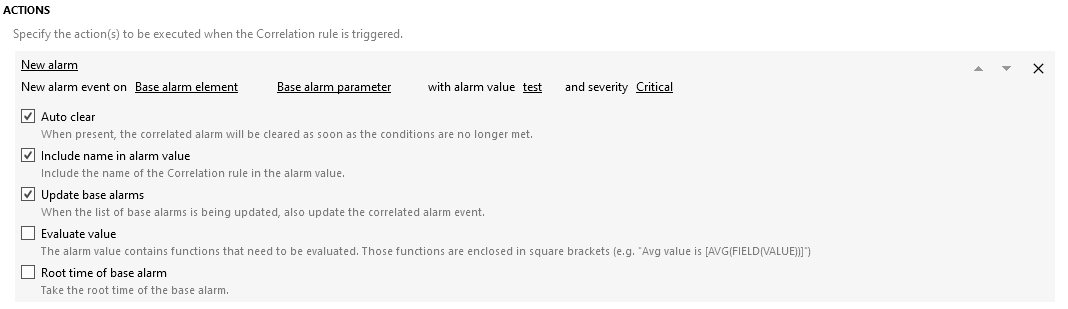

Under 'Actions', you can then let the rule create a new alarm with a specified severity and with the original alarm as base alarm.

Finally, to make sure that each alarm gets its own critical alarm associated with it, you will have to set 'Alarm Grouping' to group by alarm.

Under 'Alarm Filter', you can configure which alarms the rule applies to.
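
As a rough mental model of what such a rule does, the Python sketch below mimics the four settings described above (filter, condition, duration, action with base alarm). It is not DataMiner code or its API: `Alarm`, `evaluate_rule` and all field names are hypothetical, and for simplicity the time since the alarm was raised stands in for "the condition has been met for more than the specified time".

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Alarm:
    alarm_id: int
    severity: str
    alarm_type: str                      # e.g. "New Alarm" or "Acknowledged"
    raised_at: float                     # seconds
    base_alarm_id: Optional[int] = None  # set on alarms created by the rule


def evaluate_rule(
    alarms: List[Alarm],
    now: float,
    duration_s: float,
    alarm_filter: Callable[[Alarm], bool],
    new_severity: str = "Critical",
) -> List[Alarm]:
    """Create one new alarm per filtered alarm left unacknowledged too long."""
    created = []
    next_id = max((a.alarm_id for a in alarms), default=0) + 1
    for alarm in alarms:
        # 'Alarm Filter': skip alarms the rule does not apply to.
        if not alarm_filter(alarm):
            continue
        # Rule condition: alarm type not equal to Acknowledged.
        if alarm.alarm_type == "Acknowledged":
            continue
        # Trigger only once the condition has held longer than the duration.
        if now - alarm.raised_at > duration_s:
            # 'Actions' + grouping by alarm: one new alarm per base alarm.
            created.append(
                Alarm(next_id, new_severity, "New Alarm", now,
                      base_alarm_id=alarm.alarm_id)
            )
            next_id += 1
    return created
```

Calling `evaluate_rule(alarms, now=61.0, duration_s=60, alarm_filter=lambda a: a.severity != "Critical")` would return a new 'Critical' alarm for each non-critical alarm that was raised more than 60s earlier and is still unacknowledged, each one pointing back to its own base alarm.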

Thanks Tobe! Your suggestion sounds promising. I’ll test this out with the user.