Hello Dojo,

We had an issue where a failover agent suffered a complete hardware failure and had to be reinstalled on a new machine. There was no backup to restore, so we did a fresh 10.2 installation and upgraded to 10.2 CU11. After joining this new agent to failover, we noticed a schema mismatch. It came to light after elements were reported as no longer working, due to the previous schema being lost and a new one created.

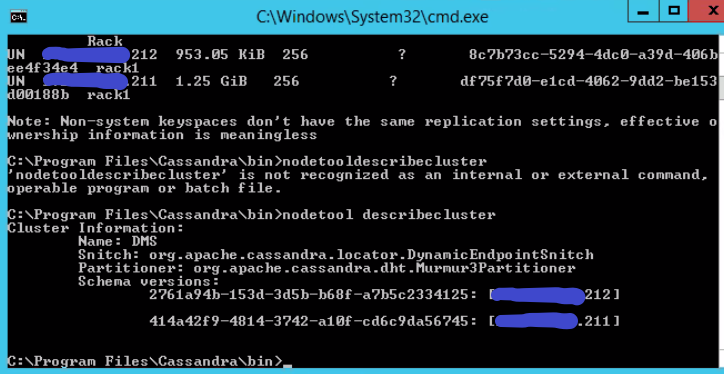

I have attempted to resolve this mismatch with a nodetool drain and rolling restarts on both nodes. When that did not work, we broke failover from the failover status window in the primary agent's Cube, reinstalled the backup agent again, and set the primary node back to localhost. From the primary node we then executed a nodetool removenode for the backup node, as it still appeared in nodetool status after breaking failover.

After rejoining the backup agent in failover, we have the same issue as above again. It seems as though the schema conflict resides somewhere in the primary node, but I am not sure where it could be stored or how to resolve it.
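
For anyone diagnosing a similar mismatch, `nodetool describecluster` lists each schema version together with the nodes that report it, so more than one version in that list points to a disagreement. Below is a minimal Python sketch that parses such output. The sample text, UUIDs, IP addresses, and function names are illustrative assumptions and are not taken from the cluster in this thread.

```python
import re
from typing import Dict, List

# Matches lines such as "<schema-uuid>: [10.0.0.1, 10.0.0.2]".
SCHEMA_LINE = re.compile(r"^\s*([0-9a-fA-F-]{36}):\s*\[(.*)\]\s*$")

# Hypothetical sample, shaped like `nodetool describecluster` output.
SAMPLE = """\
Cluster Information:
    Name: DMS
    Schema versions:
        86afa796-d883-3932-aa73-6b017cef0d19: [10.0.0.1]
        e84b6a60-24cf-30ca-9b58-452d92911703: [10.0.0.2]
"""


def schema_versions(describecluster_output: str) -> Dict[str, List[str]]:
    """Map each schema version UUID to the nodes reporting it."""
    versions: Dict[str, List[str]] = {}
    for line in describecluster_output.splitlines():
        match = SCHEMA_LINE.match(line)
        if match:
            nodes = [ip.strip() for ip in match.group(2).split(",") if ip.strip()]
            versions[match.group(1)] = nodes
    return versions


def has_schema_disagreement(describecluster_output: str) -> bool:
    """True when the nodes report more than one schema version."""
    return len(schema_versions(describecluster_output)) > 1


if __name__ == "__main__":
    for version, nodes in schema_versions(SAMPLE).items():
        print(version, nodes)
    print("disagreement:", has_schema_disagreement(SAMPLE))
```

In practice the text would come from running the command on one of the nodes rather than from a hard-coded sample.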

Thank you in advance for any insight and info!

Hey Ryan. You mentioned that the backup agent was reinstalled from scratch with the 10.2 installer. Was the main agent installed with the same installer? Can you check that the Cassandra versions are the same on both nodes? If there is a version mismatch, this might be preventing the Cassandra nodes from agreeing on the same schema.
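
As a rough illustration of that check: `nodetool version` prints a line of the form `ReleaseVersion: x.y.z` on each node. The Python sketch below compares two such outputs. The function names and the patch-level digits in the usage example are assumptions made for illustration only.

```python
import re
from typing import Tuple


def parse_release_version(nodetool_output: str) -> Tuple[int, ...]:
    """Extract the numeric version from `nodetool version` output."""
    match = re.search(r"ReleaseVersion:\s*(\d+(?:\.\d+)*)", nodetool_output)
    if match is None:
        raise ValueError("no ReleaseVersion line found")
    return tuple(int(part) for part in match.group(1).split("."))


def same_major_minor(a: Tuple[int, ...], b: Tuple[int, ...]) -> bool:
    """Compare only the major and minor components of two versions."""
    return a[:2] == b[:2]


if __name__ == "__main__":
    # Hypothetical outputs captured from two nodes.
    primary = parse_release_version("ReleaseVersion: 3.7\n")
    backup = parse_release_version("ReleaseVersion: 3.11.4\n")
    if not same_major_minor(primary, backup):
        print("Cassandra versions differ:", primary, "vs", backup)
```

Comparing only major and minor is a simplification chosen here; whether a patch-level difference matters depends on the versions involved.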

This is correct: the primary was running Cassandra 3.7 and the new ISO installs 3.11. After downgrading the secondary node to 3.7, the schema conflict was gone and all is operational again! Thank you!