Example Use Case

Process Automation Use Case – Monitoring AWS Media Services

Cloud services are on the rise, and the broadcast and media industry is adopting them to cut costs, improve performance, and deliver more resilient services.

Monitoring cloud-based services can be extremely difficult, because a single workflow can contain components hosted in the cloud as well as components located on premises.

The ability to automate the monitoring of such complex workflows is exactly what makes DataMiner a one-of-a-kind platform.

With state-of-the-art DataMiner solutions such as SRM (Service Resource Manager) and PA (Process Automation), you can automate the monitoring of every component of a given service and bundle all of those workflow components into a single DataMiner service. This gives you visibility of the service itself and of all its dependencies.

In this use case, we will be presenting:

- Targeted architecture to monitor

- Process Automation for monitoring

- Dedicated service view for each channel

USE CASE DETAILS

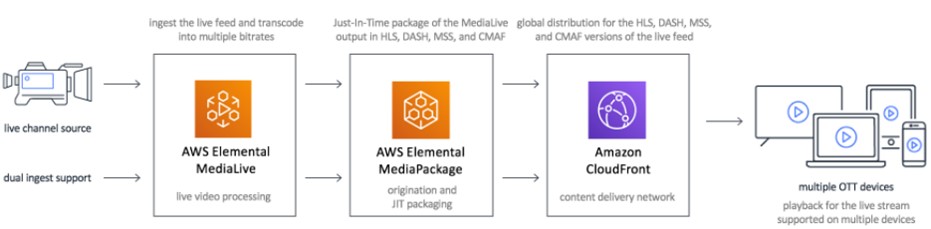

Targeted architecture to monitor – In this use case, we use a simple streaming service deployed on AWS Media Services, as shown in the image.

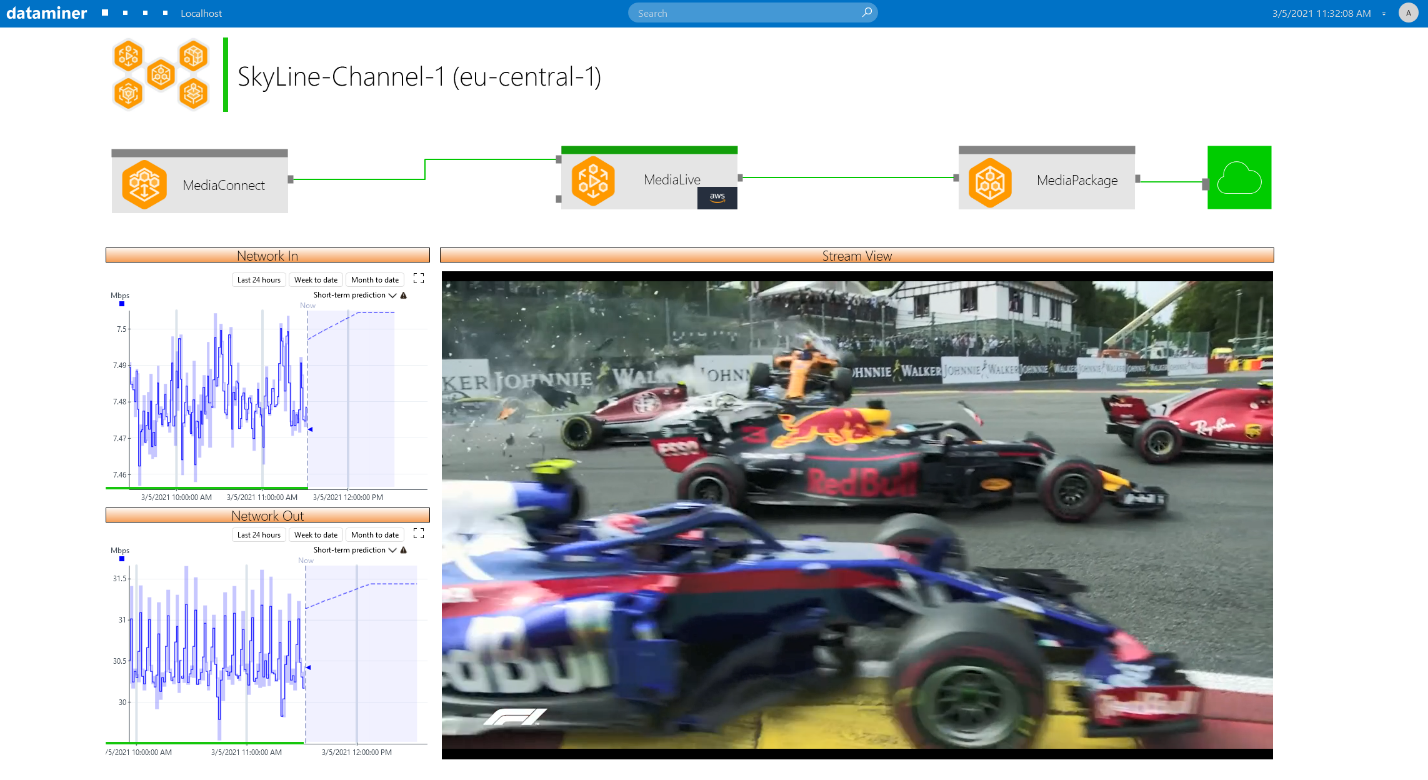

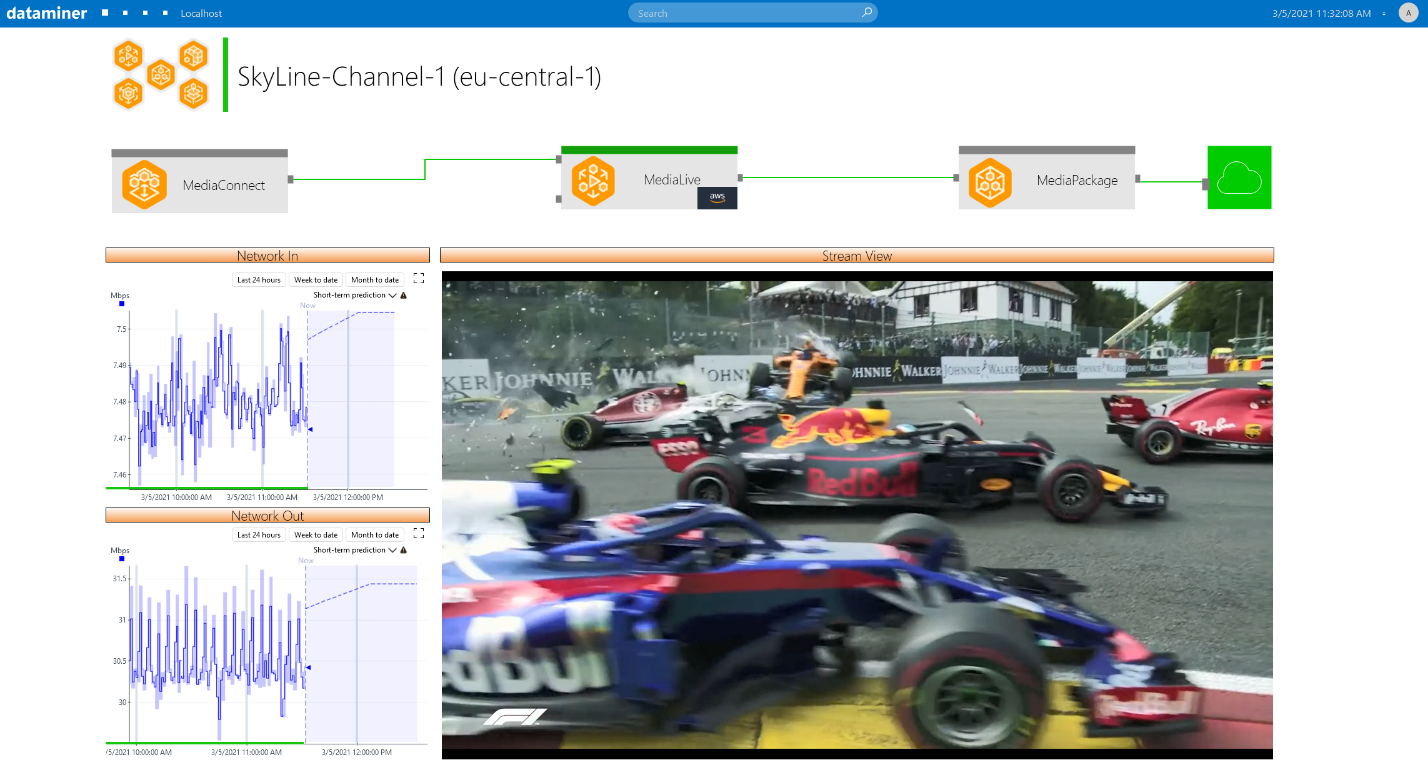

Targeted architecture to monitor – In DataMiner, we create a series of DataMiner services that group the MediaConnect flows, the MediaLive channels, and the MediaPackage playout, so that all cloud services that make up the streaming workflow are monitored.

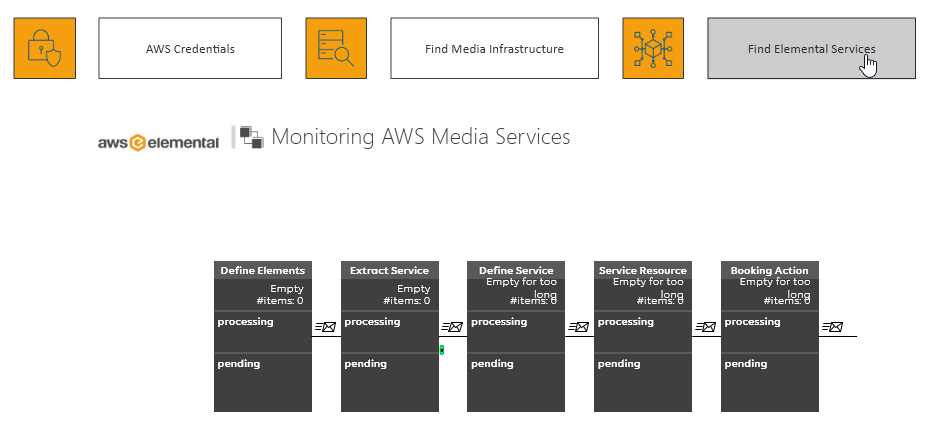

Process Automation for monitoring – The process that orchestrates the gathering of all monitoring components can be broken down into five steps.

Step 1 – Define elements: First, DataMiner identifies the elements that will make up the services. These elements, which are created based on DataMiner protocols, actively collect alarms and metrics.

Step 2 – Extract Service: In this step, the Channels table needs to be defined so that DataMiner can generate a token for each service. Generating one token per service lets DataMiner streamline the work by queuing the execution of each subsequent step for that service.
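To make the token idea concrete, here is a minimal Python sketch, assuming the Channels table can be read as a list of rows with a `name` column. `ServiceToken` and `extract_services` are hypothetical names used only for illustration; they are not part of any DataMiner API. Each row yields one token, and the tokens are queued so that the later steps can handle one service at a time.

```python
from collections import deque
from dataclasses import dataclass, field
import uuid


@dataclass
class ServiceToken:
    """Hypothetical token that carries one service through the remaining steps."""
    channel_name: str
    token_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def extract_services(channels_table):
    """Create one token per row of a (hypothetical) Channels table and queue them."""
    queue = deque()
    for row in channels_table:
        queue.append(ServiceToken(channel_name=row["name"]))
    return queue


# Hypothetical rows; the real table columns depend on the DataMiner protocol used.
channels = [{"name": "news-hd"}, {"name": "sports-hd"}]
pending = extract_services(channels)
while pending:
    token = pending.popleft()  # later steps would process one token at a time
    print(f"processing {token.channel_name}")
```

Queuing one token per service keeps each service's progress independent of the others, so a problem with one channel does not have to block the rest.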

Step 3 – Define Service: In this step, the service definition is verified. This definition contains the actual structure of the service.
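The kind of check performed in this step can be sketched as follows, assuming a hypothetical definition format in which a service lists its nodes (each with a name and an AWS component type) and the links between them. The expected chain of MediaConnect, MediaLive, and MediaPackage mirrors the targeted architecture described above; the format and the `verify_definition` function are illustrative assumptions, not the actual DataMiner service definition schema.

```python
# Hypothetical: component types a streaming service definition must contain.
EXPECTED_CHAIN = ["MediaConnect", "MediaLive", "MediaPackage"]


def verify_definition(definition):
    """Return a list of problems; an empty list means the definition looks usable."""
    problems = []
    types = [node["type"] for node in definition["nodes"]]
    for required in EXPECTED_CHAIN:
        if required not in types:
            problems.append(f"missing {required} node")
    # Every link must connect nodes that are declared in the definition.
    names = {node["name"] for node in definition["nodes"]}
    for src, dst in definition["links"]:
        if src not in names or dst not in names:
            problems.append(f"link {src}->{dst} references an unknown node")
    return problems


definition = {
    "nodes": [
        {"name": "flow-1", "type": "MediaConnect"},
        {"name": "ch-1", "type": "MediaLive"},
        {"name": "pkg-1", "type": "MediaPackage"},
    ],
    "links": [("flow-1", "ch-1"), ("ch-1", "pkg-1")],
}
print(verify_definition(definition))
```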

Step 4 – Service Resource: In this step, the services are analyzed and DataMiner components such as resources and profiles are generated to support the creation of a DataMiner service that will bundle all workflow components.

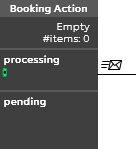

Step 5 – Booking Action: In this step, all workflow components are assembled and a DataMiner service is created in the Surveyor, providing full visibility of all its components.
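Bundling the components into one service is what lets a single view summarize the whole workflow. The following sketch assumes that the bundled service's alarm state is the most severe alarm among its components; `Component`, `BundledService`, and the severity ranking are hypothetical illustrations, not DataMiner objects.

```python
from dataclasses import dataclass

# Hypothetical severity ranking, from least to most severe.
SEVERITY_ORDER = ["Normal", "Warning", "Minor", "Major", "Critical"]


@dataclass
class Component:
    name: str
    severity: str = "Normal"


@dataclass
class BundledService:
    name: str
    components: list

    def alarm_state(self):
        """Assumed rule: the service reflects its most severe component."""
        if not self.components:
            return "Normal"
        return max((c.severity for c in self.components), key=SEVERITY_ORDER.index)


svc = BundledService(
    "news-hd",
    [Component("flow-1"), Component("ch-1", "Major"), Component("pkg-1", "Warning")],
)
print(svc.name, svc.alarm_state())
```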

This process can run for as long as needed. It periodically checks for new services and proactively stops monitoring services that have been decommissioned.
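The periodic check amounts to comparing the set of services currently found in the cloud with the set already being monitored. Below is a minimal sketch, assuming a caller-supplied function `fetch_discovered` that returns the current service names; `reconcile` and `run` are hypothetical helpers, and the print statements stand in for running Steps 2 to 5 or tearing a service down.

```python
import time


def reconcile(discovered, monitored):
    """Return (to_start, to_stop) as sorted lists of service names."""
    discovered, monitored = set(discovered), set(monitored)
    to_start = sorted(discovered - monitored)  # new services
    to_stop = sorted(monitored - discovered)   # decommissioned services
    return to_start, to_stop


def run(fetch_discovered, interval_s=300.0, cycles=None):
    """Poll for services; run forever when cycles is None."""
    monitored = set()
    n = 0
    while cycles is None or n < cycles:
        to_start, to_stop = reconcile(fetch_discovered(), monitored)
        for name in to_start:
            print(f"start monitoring {name}")  # placeholder for Steps 2 to 5
        for name in to_stop:
            print(f"stop monitoring {name}")
        monitored = (monitored | set(to_start)) - set(to_stop)
        n += 1
        if cycles is None or n < cycles:
            time.sleep(interval_s)
    return monitored
```

Working with set differences makes each cycle idempotent: a service that is already monitored and still present in the cloud is simply left alone.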

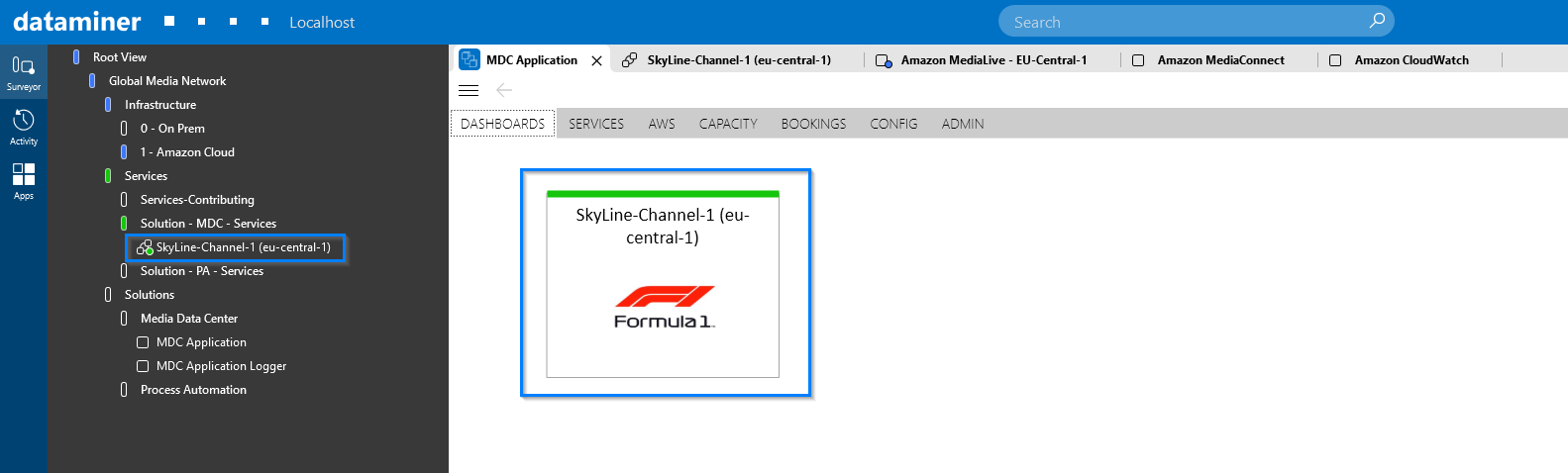

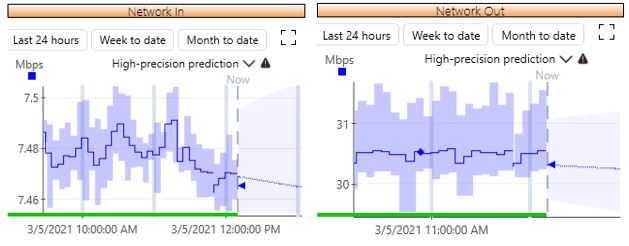

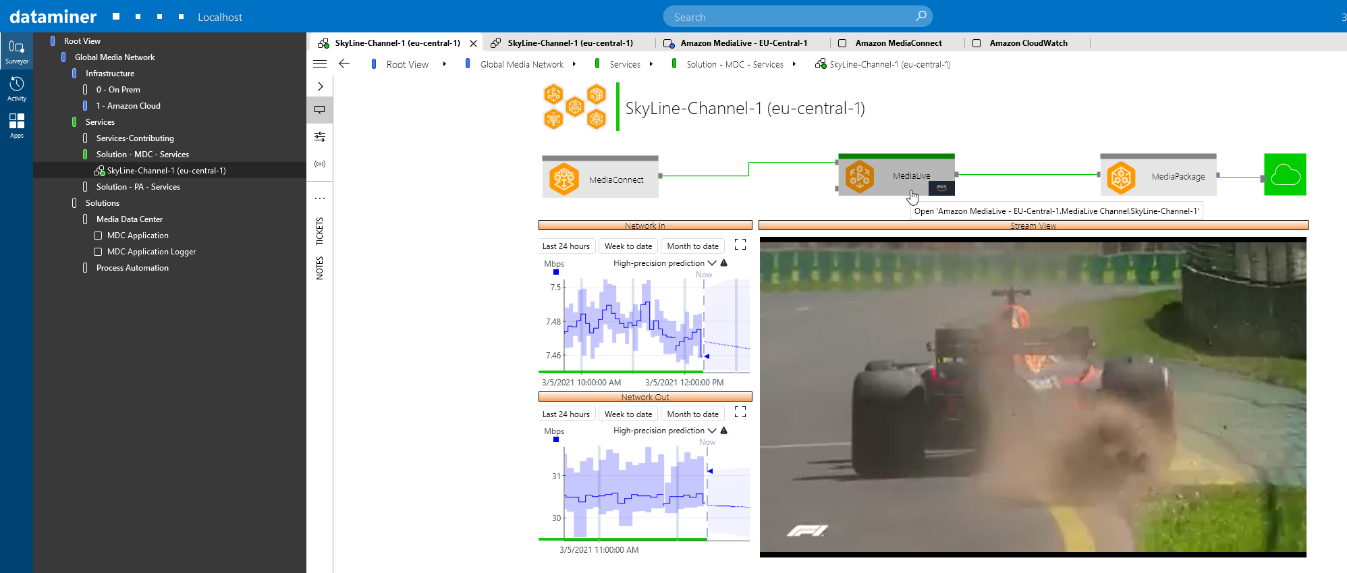

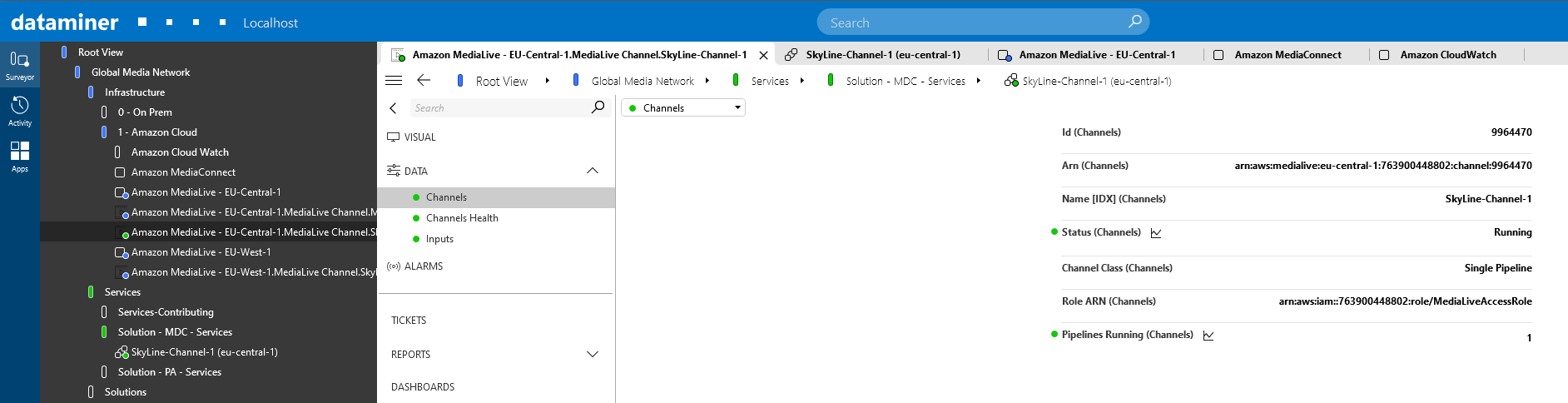

Dedicated service view – Users can actively monitor every aspect of each created service by tracking KPIs over time with the built-in trending features.

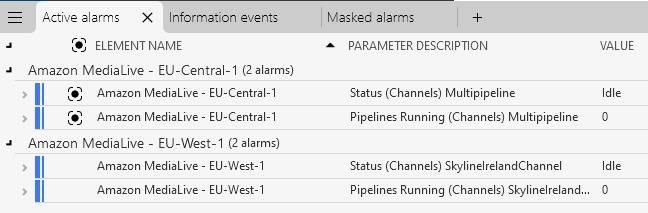

Dedicated service view – Users can also configure alarm templates so that an event is generated each time a channel's state changes.
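The event-on-state-change behavior can be illustrated with a small generic sketch. It is not how DataMiner alarm templates are configured; it only shows the rule of emitting one event whenever a channel's reported state differs from its previous value. The state names in the usage example (`IDLE`, `STARTING`, `RUNNING`) are AWS Elemental MediaLive channel states, while `state_change_events` is a hypothetical helper.

```python
def state_change_events(samples):
    """Yield an event each time a channel's state differs from its previous state.

    samples: iterable of (channel, state) readings in polling order.
    """
    last = {}
    for channel, state in samples:
        previous = last.get(channel)
        if previous is not None and previous != state:
            yield {"channel": channel, "from": previous, "to": state}
        last[channel] = state


readings = [("ch-1", "IDLE"), ("ch-1", "STARTING"), ("ch-1", "RUNNING"), ("ch-1", "RUNNING")]
for event in state_change_events(readings):
    print(event)
```

The first reading of a channel only sets its baseline, so no event is raised for it, and repeated identical readings are ignored.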

Dedicated service view – Users can also watch the live feed that is being transmitted to the CDN.

All the above-mentioned features are combined in a dedicated service view.

In this service view, which displays the KPIs, the alarm state, and the stream itself, users can quickly navigate to any of the elements (depicted as grey squares) when further analysis is needed.

This use case can be extended with several other actions tailored to specific needs:

- Automated lookup of AWS service usage, so that elements are created in DataMiner automatically across all AWS regions

- Different service setups using other AWS components

- On-premises and off-premises topologies

- Integration with other cloud vendors