Nodetool status flowchart

Ensuring that all data is replicated

To ensure that all data is replicated, you should run the nodetool repair command.

- First, try with:

nodetool repair -full SLDMADB

- If this fails, use the following command:

nodetool repair -full -tr SLDMADB >> test.txt
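The two-step procedure above could be scripted roughly as follows. This is only a sketch: it assumes that nodetool is on the PATH and that a failed repair returns a non-zero exit code, and the function names are hypothetical. The command runner is passed in as a parameter so that the logic can be tried out without a Cassandra node.

```python
import subprocess
from typing import Callable, Optional, Sequence

KEYSPACE = "SLDMADB"


def run_command(cmd: Sequence[str], log_path: Optional[str] = None) -> int:
    """Run a command and return its exit code.

    If log_path is given, standard output is appended to that file,
    which mirrors the ">> test.txt" redirection.
    """
    if log_path is None:
        return subprocess.run(cmd).returncode
    with open(log_path, "a") as log:
        return subprocess.run(cmd, stdout=log).returncode


def repair_with_fallback(
    run: Callable[..., int] = run_command,
    keyspace: str = KEYSPACE,
    log_path: str = "test.txt",
) -> str:
    """Try a full repair first; if it fails, retry with -tr and log the output."""
    # Assumption: exit code 0 means the repair succeeded.
    if run(["nodetool", "repair", "-full", keyspace]) == 0:
        return "full"
    if run(["nodetool", "repair", "-full", "-tr", keyspace], log_path) == 0:
        return "full-trace"
    return "failed"

# Usage on a Cassandra node: print(repair_with_fallback())
```

If the function returns "failed", the appended output in test.txt is the first place to look.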

In a new command prompt window, you can monitor a nodetool repair operation with two nodetool commands:

- compactionstats

- netstats

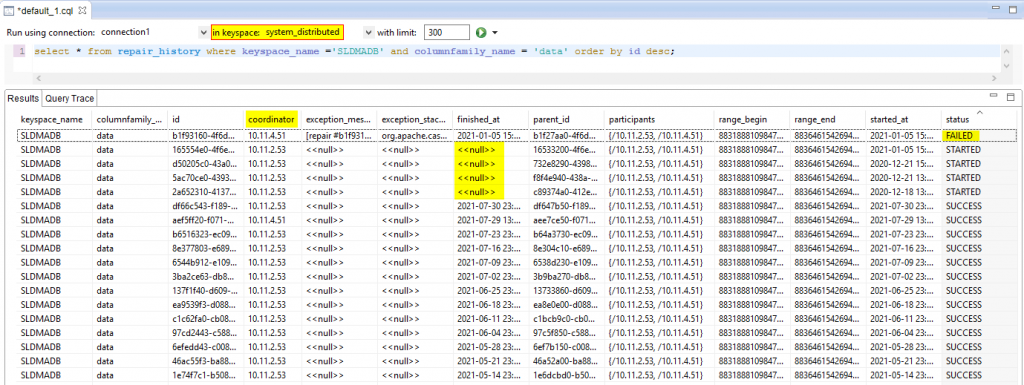

Review the repair history in DevCenter with the following query:

select * from repair_history where keyspace_name ='SLDMADB' and columnfamily_name = '[TABLE_NAME]' order by id desc;

This will return information on the latest repair for a particular SLDMADB table, as illustrated in the image below.

- The "coordinator" column indicates which node initiated the repair.

- The "finished_at" column shows a timestamp that can help you trace problems. If the "status" column shows "FAILED", as illustrated above, check the logs of both Cassandra nodes for exceptions around the time indicated in the "finished_at" column.

- A <<null>> value in the "finished_at" column indicates that no finish time was recorded (e.g. the repair is still busy or the node was restarted during the repair).

- For a multi-node system, it is important that there are some successful repairs.
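If the query results are exported or fetched programmatically, the checks described above can be automated. The sketch below assumes that each row is available as a dictionary keyed by the column names mentioned above ("coordinator", "finished_at", "status"). The function name and the reason labels are hypothetical.

```python
from typing import Any, Iterable, List, Mapping, Optional, Tuple

Flag = Tuple[Optional[str], Any, str]


def repairs_needing_attention(rows: Iterable[Mapping[str, Any]]) -> List[Flag]:
    """Return (coordinator, finished_at, reason) for rows that deserve a closer look."""
    flagged: List[Flag] = []
    for row in rows:
        if row.get("status") == "FAILED":
            # Failed repair: check the node logs around finished_at.
            flagged.append((row.get("coordinator"), row.get("finished_at"), "FAILED status"))
        elif row.get("finished_at") is None:
            # No finish time: still busy, or interrupted by a restart.
            flagged.append((row.get("coordinator"), None, "no finished_at"))
    return flagged
```

An empty result means that none of the inspected rows failed or is missing a finish time.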

Checking Cassandra logging for long or frequent garbage collection pauses

Long or frequent garbage collection pauses prevent Cassandra from writing, because a long pause effectively takes the Cassandra node offline.

Check whether your system.log file contains GC info events similar to the following examples:

INFO [ScheduledTasks:1] 2013-03-07 18:44:46,795 GCInspector.java (line 122) GC for ConcurrentMarkSweep: 1835 ms for 3 collections, 2606015656 used; max is 10611589120

INFO [ScheduledTasks:1] 2013-03-07 19:45:08,029 GCInspector.java (line 122) GC for ParNew: 9866 ms for 8 collections, 2910124308 used; max is 6358564864

Causes of garbage collection pauses include:

- Recent application changes: If the problem is recent, check if any application changes have recently occurred.

- Excessive tombstone activity: This is often caused by heavy delete workloads.

- Large row or batch updates: To resolve this, reduce the size of each individual write to less than 1 MB.

- Extremely wide rows: This manifests as problems in repairs, selects, caching, and elsewhere.
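To find GC events like the GCInspector examples above without reading system.log by hand, a small script can extract them. This is a minimal sketch that assumes the log lines have exactly the format of those examples (other Cassandra versions may log GC events differently). The 1000 ms threshold and all names are hypothetical choices, not recommended values.

```python
import re
from typing import Iterable, List, NamedTuple

# Matches lines shaped like the GCInspector examples in this section.
GC_LINE = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) GCInspector\.java .*?"
    r"GC for (?P<collector>\w+): (?P<total_ms>\d+) ms for (?P<collections>\d+) collections, "
    r"(?P<used>\d+) used; max is (?P<max>\d+)"
)


class GcEvent(NamedTuple):
    timestamp: str
    collector: str
    total_ms: int
    collections: int
    used_bytes: int
    max_bytes: int


def find_long_gc_events(lines: Iterable[str], threshold_ms: int = 1000) -> List[GcEvent]:
    """Return GC events whose reported total duration is at least threshold_ms."""
    events: List[GcEvent] = []
    for line in lines:
        match = GC_LINE.search(line)
        if match is None:
            continue  # not a GCInspector line in the assumed format
        event = GcEvent(
            match["timestamp"],
            match["collector"],
            int(match["total_ms"]),
            int(match["collections"]),
            int(match["used"]),
            int(match["max"]),
        )
        if event.total_ms >= threshold_ms:
            events.append(event)
    return events

# Usage: with open("system.log") as f: print(find_long_gc_events(f))
```

The timestamps of the returned events can then be compared with the times at which writes failed or repairs were reported as failed.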